Abstract

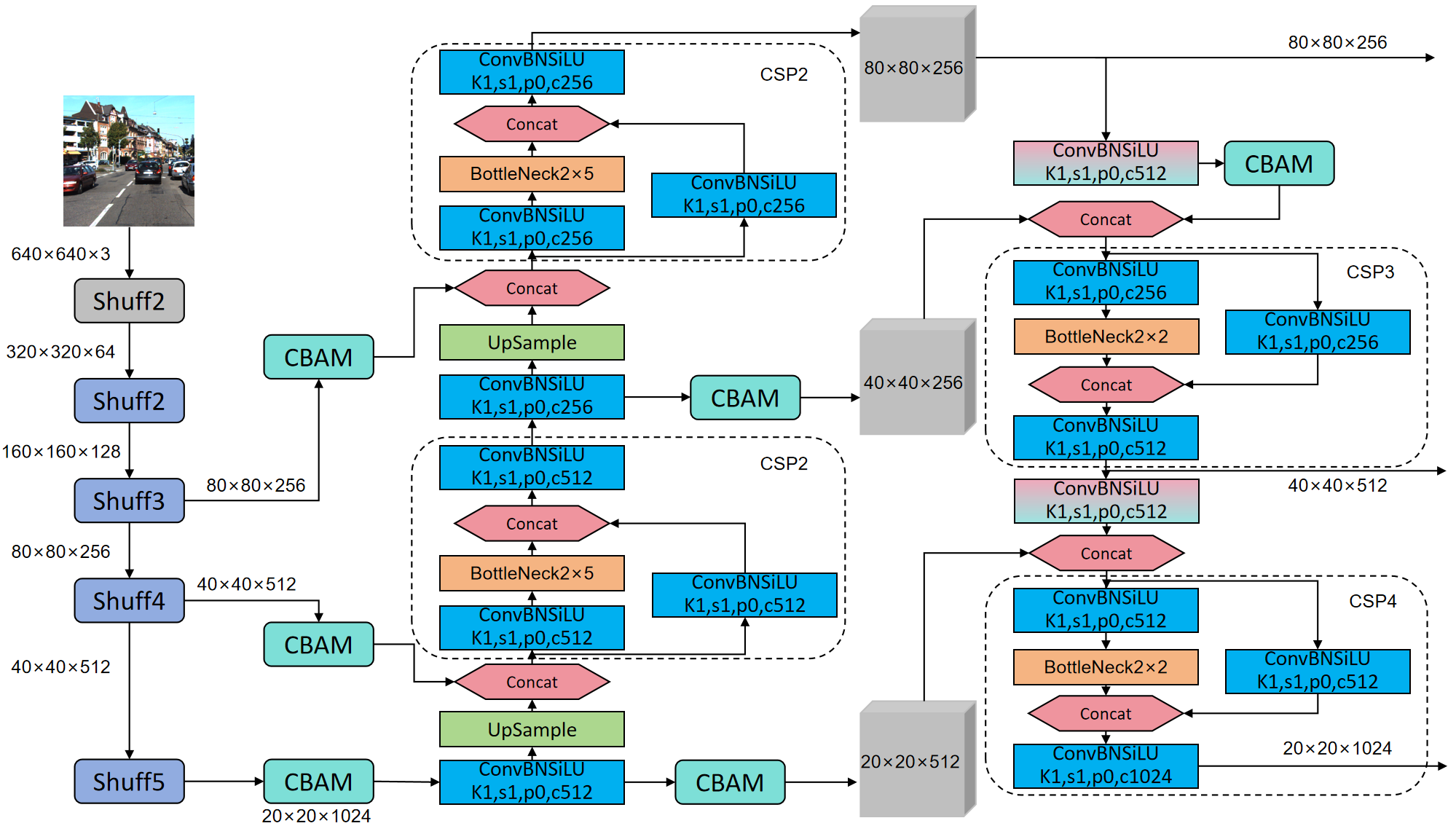

With the rapid development of autonomous driving technology, demand has grown for real-time, efficient object detection systems that let vehicles accurately perceive and respond to their surroundings. Traditional object detection models often have large parameter counts and high computational cost, which limits their use on edge devices. To address this, we propose YOLOv8-Lite, a lightweight object detection model based on the YOLOv8 framework and enhanced with the FastDet structure, the TFPN pyramid structure, and the CBAM attention mechanism. These changes improve both the performance and the efficiency of the model. Experimental results on the NEXET and KITTI datasets show significant performance improvements. Compared with traditional methods, our model achieves higher accuracy and robustness in object detection tasks, better addresses the challenges of fields such as autonomous driving, and contributes to the advancement of intelligent transportation systems.

Keywords

autonomous driving

object detection

YOLOv8

real-time performance

intelligent transportation

Data Availability Statement

Data will be made available on request.

Funding

This work received no funding.

Conflicts of Interest

The authors declare no conflicts of interest.

Ethical Approval and Consent to Participate

Not applicable.

Cite This Article

APA Style

Yang, M., & Fan, X. (2024). YOLOv8-Lite: A Lightweight Object Detection Model for Real-time Autonomous Driving Systems. IECE Transactions on Emerging Topics in Artificial Intelligence, 1(1), 1–16. https://doi.org/10.62762/TETAI.2024.894227

Publisher's Note

IECE stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Copyright © 2024 by the Author(s). Published by Institute of Emerging and Computer Engineers. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.

