IECE Transactions on Intelligent Systematics

ISSN: 2998-3355 (Online) | ISSN: 2998-3320 (Print)

Email: [email protected]

Bird strikes have long been a challenge for the civil aviation industry. With the rapid development of civil aviation and the sharp increase in passenger traffic, the frequency of bird strike incidents worldwide has been rising, and the safety of the aircraft operating environment has received widespread attention [1, 2]. Effective monitoring of birds in the low-altitude airspace of airports is therefore of great significance for civil aviation safety. In particular, effective surveillance of the runway and the airspace within 60 meters above it is an urgent problem that must be solved to ensure civil aviation safety [3].

Among existing methods for detecting birds at airports, manual observation has poor real-time performance and low accuracy, and acoustic detection equipment is prone to interference from environmental noise [4]. Reference [5] uses radar for detection, but its resolution is limited at close range, which makes accurate detection difficult. Reference [6] detects birds through background subtraction and tracking of corresponding points; it relies on modeling the background environment and is easily affected by lighting changes. Reference [7] uses a sliding window and a convolutional neural network to locate and detect birds, but detection is slow. Reference [8] adopted an attention mechanism to improve YOLOv5 for bird detection on airport mobile devices; however, because of the devices' limited memory, it cannot handle large-scale flock detection. Reference [9] proposed a bird detection method based on image preprocessing in fully dynamic environments, but it is strongly affected by changes in illumination. Reference [10] uses a query mechanism to determine whether targets are present in the current frame, at a relatively high computational cost. Reference [11] can detect flying birds but adapts poorly to changes in their apparent size. This study therefore adopts a relatively advanced deep learning algorithm for airport bird detection, which faces the following difficulties: (1) the apparent size of birds varies greatly across images, and detection is easily affected by background factors such as lighting; (2) birds are similar in shape to aircraft, which easily causes false detections; (3) flying birds frequently occlude one another, leading to many missed detections; and (4) existing public datasets are captured at relatively close range and therefore cannot be applied directly to airport bird detection.

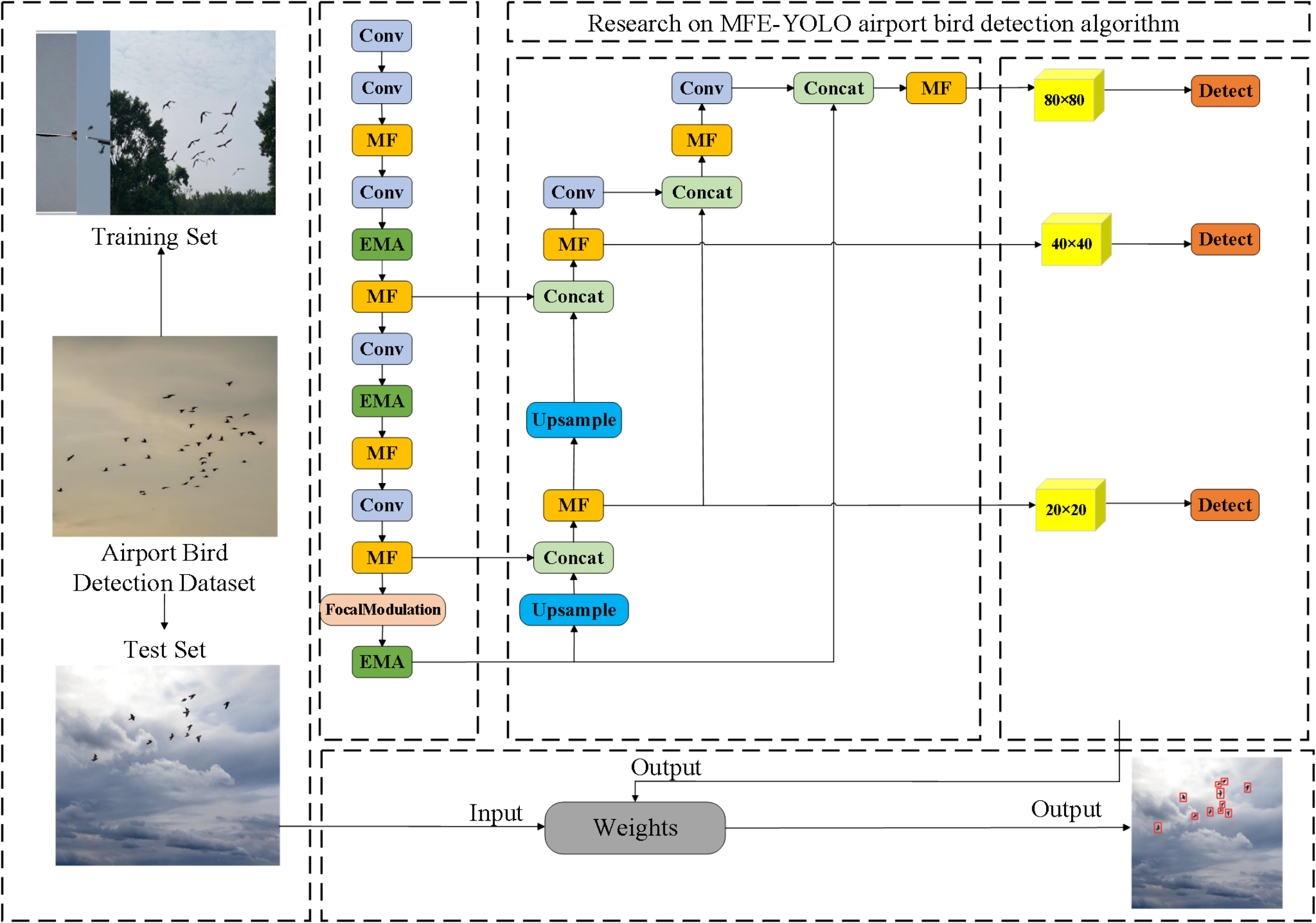

To overcome these difficulties, this paper proposes a lightweight recognition network, MFE-YOLO (Multi-Features-Efficient YOLO). The MF (Multi-Features) module enhances the network's ability to extract the varied morphological characteristics of birds while reducing the number of model parameters and the computational complexity. The EMA (Efficient Multi-Scale Attention) mechanism increases the network's attention to bird targets rather than aircraft. Focal-Modulation reduces interference from environmental factors such as illumination. DCSlideLoss (Decay-Slide Loss) improves the network's fit to bird-flock samples. Finally, the detection ability of the algorithm is verified through experiments, and its real-time processing ability is verified with Byte Track.

The architecture of the deep-learning-based MFE-YOLO network for airport bird detection is shown in Figure 1. First, this study introduces the MF module, which incorporates dilated convolution, to replace the original C2f module of the YOLOv8 baseline. This enhances the network's ability to extract multi-scale features of flying birds while reducing the number of parameters and improving computational speed. Second, to mitigate interference from environmental factors such as lighting, the original SPPF module is replaced with the Focal-Modulation module. Third, the EMA mechanism is incorporated to strengthen the network's focus on bird targets. Fourth, the DCSlideLoss function is adopted during training to suppress the gradients of extreme samples and increase the weight of regular samples. Finally, the Byte Track algorithm is used to evaluate the network's real-time detection performance, and generalization experiments are conducted on the MS COCO dataset. The following sections introduce the MF module, the EMA mechanism, Focal-Modulation, DCSlideLoss, the Byte Track structure, and the experiments.

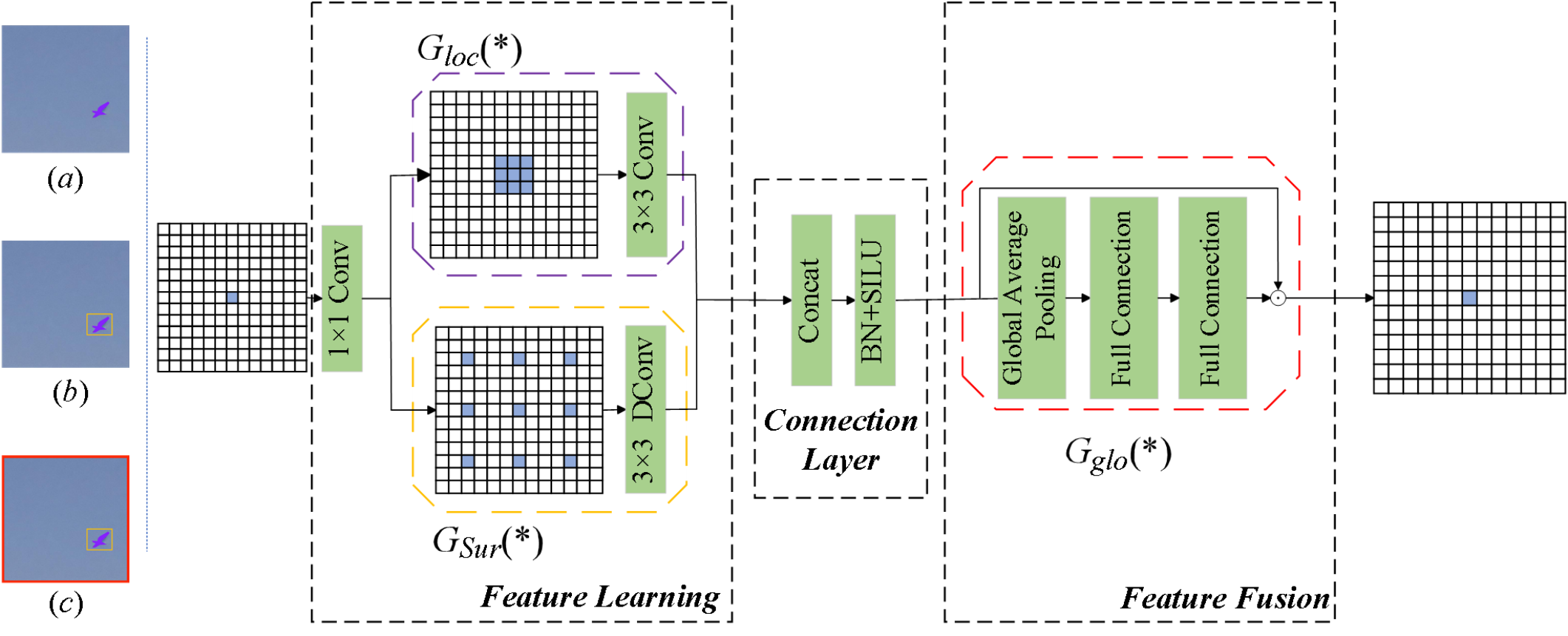

Birds in flight are small targets, and stacked convolutions alone extract few features from them. To obtain more features for small targets, this paper proposes the MF module, modeled on the CG block of the Context Guided Network (CGNet) [12], and uses it to replace the C2f module in the original structure. This allows the network to generate richer feature maps while reducing the number of parameters and the complexity of the original network.

As shown in Figure 2, the MF block has three main steps: Feature Learning, Connection Layer, and Feature Fusion. The Feature Learning step concentrates on learning spatially joint features, while the Feature Fusion step refines the joint features along the channel dimension.

As shown in the Feature Learning step in Figure 2, the feature learning phase consists of two main components, a local feature extractor and a contextual feature extractor, which learn the local features and the surrounding context, respectively. The local feature extractor is instantiated as a standard 3×3 convolutional layer and learns local features from the eight neighboring feature vectors, corresponding to the purple area in Figure 2(a). The contextual feature extractor is instantiated as a 3×3 dilated convolution with a dilation rate of 2; its larger receptive field makes it easy to learn contextual features, corresponding to the yellow area in Figure 2(b).
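The effect of dilation on the two extractors can be checked with a small pure-Python sketch. It is an illustration under simplifying assumptions (one channel, an all-ones kernel, no learned weights), not the authors' implementation, and the helper names `conv2d_same` and `effective_kernel_size` are hypothetical. Applying the same 3×3 kernel to a single impulse with dilation rates 1 and 2 shows that both use nine taps, but the dilated kernel spreads them over a 5×5 window, i.e. an effective size of $k + (k-1)(d-1)$.

```python
def conv2d_same(x, kernel, dilation=1):
    """Zero-padded 2D cross-correlation of one channel; output has the same H x W as x."""
    h, w, k = len(x), len(x[0]), len(kernel)
    c = k // 2  # index of the kernel centre
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(k):
                for b in range(k):
                    # dilation spaces the kernel taps `dilation` pixels apart
                    ii = i + (a - c) * dilation
                    jj = j + (b - c) * dilation
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += kernel[a][b] * x[ii][jj]
            out[i][j] = acc
    return out


def effective_kernel_size(k, dilation):
    """Side length of the window covered by a k x k kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)


# A single impulse in the middle of a 7 x 7 map reveals which positions each kernel touches.
impulse = [[0.0] * 7 for _ in range(7)]
impulse[3][3] = 1.0
ones = [[1.0] * 3 for _ in range(3)]

local = conv2d_same(impulse, ones, dilation=1)    # local extractor: standard 3x3
context = conv2d_same(impulse, ones, dilation=2)  # contextual extractor: 3x3, dilation 2

for name, fmap in (("local", local), ("context", context)):
    rows = sorted({i for i in range(7) for j in range(7) if fmap[i][j] != 0.0})
    print(name, "responds on rows", rows)
print("effective size with dilation 2:", effective_kernel_size(3, 2))
```

Running it reports rows 2 to 4 for the local extractor and rows 1, 3, and 5 for the contextual extractor: the same number of weights covers a wider neighborhood.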

To keep the computational cost low, this paper uses Batch Normalization (BN) and the SiLU activation function as the connection layer between the Feature Learning and Feature Fusion phases: the outputs of the local and contextual feature extractors are combined into a joint feature, normalized and activated, and then passed to the Feature Fusion phase for multilevel feature fusion.
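A minimal sketch of the connection layer follows, assuming (as in CGNet) that the two extractor outputs are joined by channel concatenation. Feature maps are plain Python lists of flattened channels, BN uses the statistics of a single sample with unit scale and zero shift instead of learned parameters, and the function name `connection_layer` is hypothetical.

```python
import math


def connection_layer(local_feat, context_feat, eps=1e-5):
    """Concatenate two feature maps along the channel axis, then apply BN and SiLU.

    Each feature map is a list of channels; each channel is a flattened list of H*W values.
    BN here uses the statistics of this single sample (batch size 1) with gamma = 1 and
    beta = 0, a simplification of a trained BN layer.
    """
    joint = local_feat + context_feat  # channel-wise concatenation (assumed, as in CGNet)
    out = []
    for ch in joint:
        mean = sum(ch) / len(ch)
        var = sum((v - mean) ** 2 for v in ch) / len(ch)
        normed = [(v - mean) / math.sqrt(var + eps) for v in ch]
        # SiLU(x) = x * sigmoid(x)
        out.append([v / (1.0 + math.exp(-v)) for v in normed])
    return out


local_feat = [[1.0, 2.0, 3.0, 4.0]]      # one channel from the local extractor
context_feat = [[5.0, 5.0, 5.0, 5.0]]    # one channel from the contextual extractor
joint = connection_layer(local_feat, context_feat)
print(len(joint), "channels")            # the channel count doubles after concatenation
```

A constant channel normalizes to zero and SiLU maps zero to zero, while the varying channel keeps its ordering, with negative values damped rather than cut off.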

As shown in the Feature Fusion step in Figure 2, the Feature Fusion phase contains a global context extractor that enhances the joint features by extracting global contextual information. It is instantiated as a global average pooling layer followed by a multilayer perceptron, shown in the red box in Figure 2(c). The global average pooling layer aggregates information over the whole scene, and the multilayer perceptron, composed of two fully connected layers, further extracts the global contextual relationships. Finally, these relationships are used to re-weight the joint features for fusion.
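The global re-weighting step can be sketched as follows. It follows the squeeze-and-excitation style commonly used for CGNet's global context extractor; the ReLU between the two fully connected layers, the sigmoid output, the omitted biases, the weights `w1` and `w2`, and the function name are assumptions for illustration.

```python
import math


def global_context_reweight(features, w1, w2):
    """Re-weight channels with a GAP + two-layer MLP, in the style of CGNet's global extractor.

    features: list of C channels, each an H x W nested list.
    w1: hidden x C weight matrix of the first fully connected layer (bias omitted).
    w2: C x hidden weight matrix of the second fully connected layer (bias omitted).
    """
    # 1) Global average pooling: one scalar summary per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in features]
    # 2) Two fully connected layers: ReLU in between, sigmoid at the output.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    scores = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden)))) for row in w2]
    # 3) Scale every value of channel c by its score.
    reweighted = [[[s * v for v in row] for row in ch] for s, ch in zip(scores, features)]
    return reweighted, scores


feats = [[[2.0, 2.0], [2.0, 2.0]], [[4.0, 0.0], [0.0, 4.0]]]  # C = 2, H = W = 2
w1 = [[1.0, 0.0]]       # hypothetical weights, hidden size 1
w2 = [[1.0], [-1.0]]    # hypothetical weights
out, scores = global_context_reweight(feats, w1, w2)
print([round(s, 3) for s in scores])
```

With these hypothetical weights the first channel receives a score of about 0.881 and the second about 0.119, so each channel of the joint feature is scaled according to the global context.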

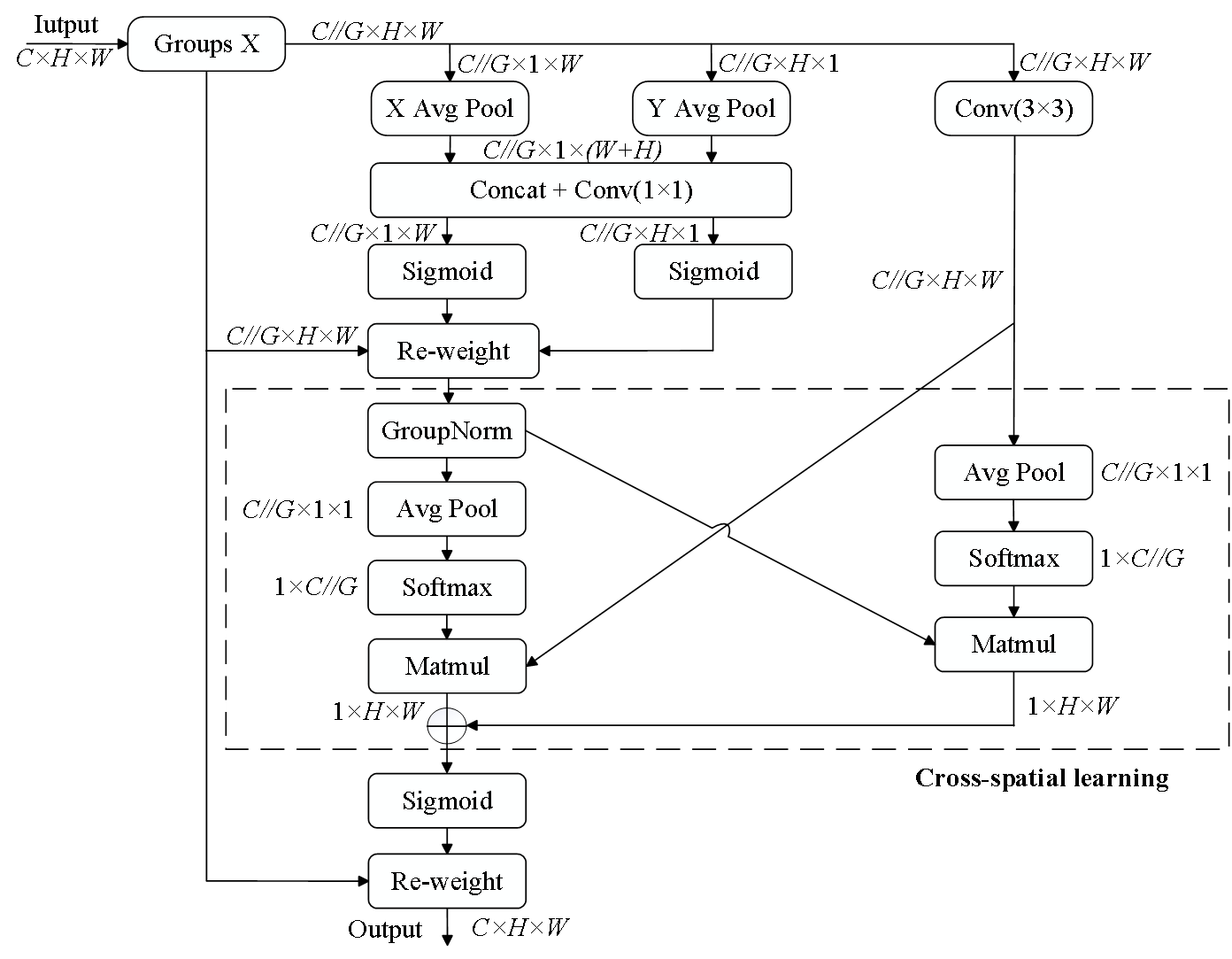

Due to the considerable variations in posture and shape exhibited by birds during flight, this paper integrates the EMA mechanism into the network architecture, as depicted in Figure 3. This integration enhances the network's feature extraction capability and improves its robustness to image transformations such as flipping and rotation, all while maintaining computational efficiency under limited resource constraints [13].

The EMA mechanism has three parallel routes for extracting attention weights: two paths in the 1×1 convolution branch and one 3×3 convolution path. The outputs of the 1×1 and 3×3 branches are then fed into a cross-spatial learning stage, which models cross-channel interactions in both directions to achieve richer feature aggregation. In this stage, 2D global average pooling encodes the global spatial information of the 1×1 branch output, and the output of the smallest branch is transformed into a shape that matches the 3×3 branch, i.e., $\mathbb{R}^{1\times C//G} \times \mathbb{R}^{C//G\times HW}$, where $C$ is the number of input channels, $H$ and $W$ are the height and width of the input features, and $C//G$ is the number of channels in each of the $G$ sub-feature groups. Finally, a Sigmoid function produces attention weights with the same dimensions as the input, which reweight the input feature map through a ResNet-style structure.
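The cross-spatial learning step for a single channel group can be sketched in pure Python as follows. This is a simplified illustration that assumes the outputs of the 1×1 and 3×3 branches are already computed; the convolutions, the directional pooling inside the 1×1 branch, and the group normalization of the original EMA design are omitted, and all names and input values are hypothetical. Each branch's 2D-pooled channel descriptor ($1\times C//G$, after softmax) is multiplied with the other branch's $C//G\times HW$ map, the two resulting spatial maps are summed, and a sigmoid turns them into weights for the group's input.

```python
import math


def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def gap(channels):
    """2D global average pooling on flattened channels: one value per channel."""
    return [sum(ch) / len(ch) for ch in channels]


def cross_spatial_learning(x_group, out_1x1, out_3x3):
    """Cross-spatial learning for one channel group of an EMA-style block.

    x_group, out_1x1, out_3x3: C//G channels, each a flattened list of H*W values.
    Returns the re-weighted group and the H*W spatial attention weights.
    """
    hw = len(x_group[0])
    # Channel descriptors (1 x C//G), normalised with softmax.
    d_1x1 = softmax(gap(out_1x1))
    d_3x3 = softmax(gap(out_3x3))
    # (1 x C//G) @ (C//G x HW) in both directions gives two spatial attention maps.
    map_a = [sum(d_1x1[c] * out_3x3[c][p] for c in range(len(out_3x3))) for p in range(hw)]
    map_b = [sum(d_3x3[c] * out_1x1[c][p] for c in range(len(out_1x1))) for p in range(hw)]
    # Aggregate both maps and squash to (0, 1) with a sigmoid.
    weights = [1.0 / (1.0 + math.exp(-(a + b))) for a, b in zip(map_a, map_b)]
    return [[v * w for v, w in zip(ch, weights)] for ch in x_group], weights


x = [[1.0, 2.0, 3.0, 4.0], [0.5, 0.5, 0.5, 0.5]]       # C//G = 2 channels, H*W = 4
b1 = [[0.1, 0.2, 0.3, 0.4], [0.0, 0.0, 0.0, 0.0]]      # hypothetical 1x1-branch output
b3 = [[0.4, 0.3, 0.2, 0.1], [0.2, 0.2, 0.2, 0.2]]      # hypothetical 3x3-branch output
y, w = cross_spatial_learning(x, b1, b3)
print(len(y), "channels x", len(y[0]), "positions")
```

The output keeps the group's shape, which is what allows the attention to be applied to the input feature map position by position.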

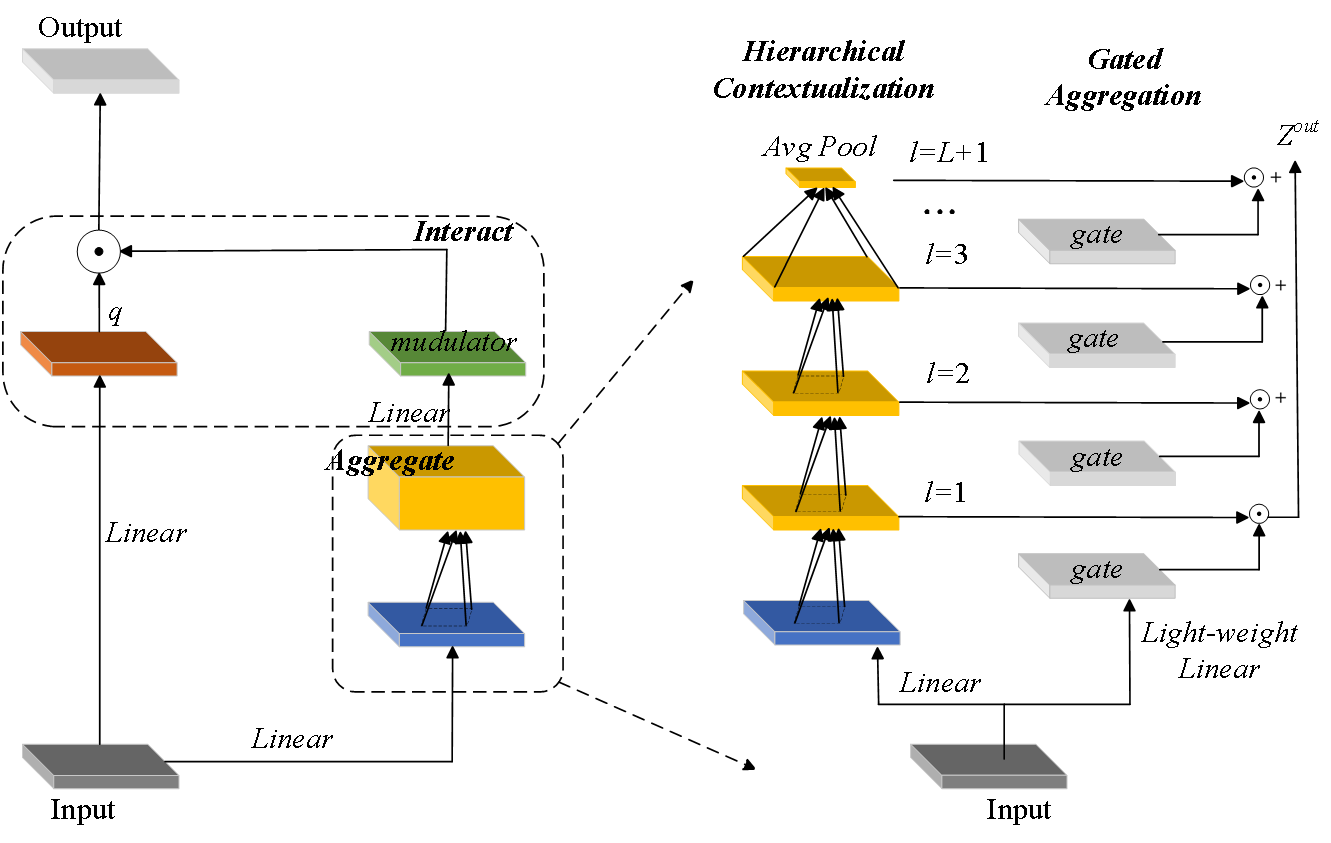

This study introduces a Focal-Modulation module, grounded in focal modulation theory [14], to further improve the model's efficiency and accuracy in addressing multi-scale variations of flying birds while mitigating background interference.

Replacing the original SPPF module, the proposed module leverages the focal modulation mechanism to emphasize salient regions within the image, thereby capturing long-range dependencies and contextual information.

This enhancement significantly increases the model's suitability for detecting small and hard-to-identify bird targets, particularly in complex background environments.

As shown in Figure 4, the module's procedure has two parts: hierarchical contextualization, which extracts context from local to global scope at multiple levels of granularity, and gated aggregation, which compresses the context features of all granularity levels into a modulator.

Given an input feature map $X$, a linear layer first projects it into a new feature space, and a stack of depthwise convolutions then produces a hierarchical context representation that is passed to the modulator. In the aggregation part, another linear layer produces spatial- and level-aware gating weights; the context features are multiplied element-wise by these weights and summed over levels, yielding a single feature map of the same size as the input $X$.
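A toy version of the two stages, hierarchical contextualization followed by gated aggregation and modulation of a query, is sketched below. To keep it short it works on a single-channel 1D sequence, uses fixed scalar weights in place of the learned linear layers, and stacks one fixed 3-tap kernel where FocalNet-style implementations use learned depthwise kernels; all of these, and the function names, are assumptions. The output has the same length as the input, mirroring the same-size output described above.

```python
import math


def gelu(x):
    """GELU, tanh approximation."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def dwconv1d(seq, kernel):
    """Zero-padded 1D convolution standing in for a depthwise convolution on one channel."""
    r = len(kernel) // 2
    n = len(seq)
    return [sum(kernel[k] * seq[i + k - r] for k in range(len(kernel)) if 0 <= i + k - r < n)
            for i in range(n)]


def focal_modulation(x, levels=2, kernel=(0.25, 0.5, 0.25), w_q=1.0, w_z=1.0, w_g=None, w_h=1.0):
    """Toy single-channel, 1D focal modulation; scalar weights stand in for learned linear layers."""
    n = len(x)
    w_g = w_g or [1.0] * (levels + 1)
    q = [w_q * v for v in x]                    # query projection
    z = [w_z * v for v in x]                    # projection into the context space
    contexts = []
    for _ in range(levels):                     # hierarchical contextualization, local to wider
        z = [gelu(v) for v in dwconv1d(z, kernel)]
        contexts.append(z)
    g = gelu(sum(z) / n)                        # global level: average pooling of the last level
    contexts.append([g] * n)
    modulator = [0.0] * n
    for level, ctx in enumerate(contexts):      # gated aggregation with spatial, level-aware gates
        gates = [w_g[level] * v for v in x]     # linear gate computed from the input
        modulator = [m + gt * c for m, gt, c in zip(modulator, gates, ctx)]
    modulator = [w_h * m for m in modulator]    # h(.): linear mixing of the modulator
    return [qi * mi for qi, mi in zip(q, modulator)]  # element-wise modulation of the query


y = focal_modulation([0.2, 1.0, 0.4, -0.3, 0.8])
print(len(y))  # same length as the input
```

Each additional level widens the effective context because the 3-tap kernels compose, and the final global level supplies the scene-wide summary.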

Because the number of flying birds over the airport varies, high-density flock scenes contain much more complex local patterns and textures than low-density scenes or other background regions. The difference between these regions is large, and simple samples greatly outnumber difficult samples, which easily leads to duplicate and missed detections. This study therefore proposes a joint loss function weighted by the DCSlideLoss sample weighting function.

The joint loss combines the distribution focal loss (DFL) and the bounding box loss. These terms are defined in terms of the label value, the target's label score, a scale parameter that measures the aspect-ratio consistency of the anchor and target boxes, the centroids of the anchor and target boxes, the Euclidean distance between the two centroids, the diagonal length of the smallest rectangle enclosing both boxes, the predicted probability score for a flying bird, and the intersection over union (IoU) of the predicted and ground-truth boxes.
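The quantities listed above correspond to the standard CIoU bounding box loss and the distribution focal loss (DFL) used in YOLOv8. Assuming the paper uses these standard forms, which the text does not state explicitly, both terms can be sketched as follows; the function names are hypothetical and boxes are given as $(x_1, y_1, x_2, y_2)$.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """1 - CIoU for two boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph, tw, th = px2 - px1, py2 - py1, tx2 - tx1, ty2 - ty1
    # IoU: intersection over union of predicted and ground-truth boxes
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    iou = inter / (pw * ph + tw * th - inter + eps)
    # squared Euclidean distance between the two box centres
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4.0
    # squared diagonal of the smallest box enclosing both boxes
    c2 = (max(px2, tx2) - min(px1, tx1)) ** 2 + (max(py2, ty2) - min(py1, ty1)) ** 2 + eps
    # aspect-ratio consistency term v and its trade-off weight alpha
    v = (4.0 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1.0 - iou + v + eps)
    return 1.0 - (iou - rho2 / c2 - alpha * v)


def dfl(probs, y):
    """Distribution focal loss for a continuous target y between two integer bins.

    probs: predicted (softmax) distribution over bins 0..n-1; requires 0 <= y < n - 1.
    """
    left = int(math.floor(y))
    right = left + 1
    return -((right - y) * math.log(probs[left]) + (y - left) * math.log(probs[right]))


print(round(ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)), 4))
print(round(dfl([0.25, 0.25, 0.25, 0.25], 1.5), 4))
```

For two non-overlapping unit boxes whose centres are $\sqrt{8}$ apart inside a 3×3 enclosing box, the loss is $1 + 8/18 \approx 1.444$, so the centroid-distance term still provides a training signal when the IoU is zero.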

The DCSlideLoss sample weighting function is defined piecewise with respect to a threshold parameter that separates simple and difficult samples. This parameter is introduced because the loss is larger near the boundary between simple and difficult samples. During training, DCSlideLoss uses an exponential function to weight the loss near this threshold while smoothing the loss weights of samples close to the transition; the resulting weight coefficients are shown in Figure 5.

For a simple sample, the weight coefficient is 1. For a sample in the critical transition zone between simple and difficult samples, the weight coefficient is raised to emphasize its intermediate difficulty. For a difficult sample, the weight coefficient is set by the exponential weighting function.
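The piecewise weighting described above matches the structure of the original Slide Loss. The sketch below implements that original form, with weight 1 up to $\mu - 0.1$, $e^{1-\mu}$ in the transition zone, and $e^{1-x}$ from $\mu$ onward. Whether DCSlideLoss uses the same breakpoints and exponents, and what its decay modification changes, is not stated here, so the breakpoints, the choice of IoU as $x$, and the names `slide_weight` and `weighted_bce` are assumptions.

```python
import math


def slide_weight(x, mu):
    """Piecewise sample weight in the form of the original Slide Loss.

    x: the sample's IoU with its ground truth (assumed to be the thresholded quantity).
    mu: threshold separating simple and difficult samples.
    """
    if x <= mu - 0.1:
        return 1.0                 # simple sample: weight unchanged
    if x < mu:
        return math.exp(1.0 - mu)  # transition zone just below the threshold
    return math.exp(1.0 - x)       # difficult-sample branch: decays as x grows


def weighted_bce(p, label, x, mu, eps=1e-12):
    """Binary cross-entropy of predicted probability p, scaled by the slide weight."""
    bce = -(label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps))
    return slide_weight(x, mu) * bce


for x in (0.3, 0.55, 0.8):
    print(x, round(slide_weight(x, mu=0.6), 3))
```

With $\mu = 0.6$ the weights are 1.0, about 1.492, and about 1.221 for $x$ = 0.3, 0.55, and 0.8, so samples near the threshold receive the largest weight.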

Because flying birds are small, similar in shape, and frequently overlap during flight, this paper combines the Byte Track algorithm with the MFE-YOLO detector to validate its detection ability in real-time scenarios. Figure 6 shows the block diagram of the matching strategy.

The Byte Track procedure is as follows. First, MFE-YOLO performs target detection on each frame, and the detections are divided into high-confidence and low-confidence boxes. A Kalman filter predicts the motion of each existing track and generates a predicted track box. Next, the Hungarian algorithm matches the high-confidence detections with the existing tracks in a first association. Successfully matched tracks have their Kalman filters updated and are added to the track set; unmatched tracks are placed in a set of remaining tracks, and unmatched high-confidence detections are placed in a set of remaining detections. In the second association, the low-confidence detections are matched with the remaining tracks. Tracks matched in this round have their Kalman filters updated and are stored in the track set of the current frame, while tracks that fail both associations are deleted. The Kalman filter then predicts the new positions of the resulting tracks, and all tracks of the current frame are passed on as the existing tracks for the next frame.
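The two-stage association can be sketched as follows. This is a simplified pure-Python illustration, not the Byte Track reference implementation: greedy IoU matching stands in for the Hungarian algorithm, the Kalman prediction is assumed to be done already (tracks carry their predicted boxes), the update simply adopts the matched detection box, and, as in the description above, tracks unmatched in both rounds are dropped. Starting new tracks from unmatched high-confidence detections follows the original Byte Track design. The thresholds `high_thr` and `min_iou` and all function names are hypothetical.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(track_boxes, det_boxes, min_iou):
    """Greedy IoU matching, a simple stand-in for the Hungarian algorithm."""
    pairs = sorted(
        ((iou(t, d), ti, di) for ti, t in enumerate(track_boxes) for di, d in enumerate(det_boxes)),
        reverse=True,
    )
    used_t, used_d, matches = set(), set(), []
    for score, ti, di in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little
        if ti in used_t or di in used_d:
            continue
        used_t.add(ti)
        used_d.add(di)
        matches.append((ti, di))
    unmatched_t = [i for i in range(len(track_boxes)) if i not in used_t]
    unmatched_d = [i for i in range(len(det_boxes)) if i not in used_d]
    return matches, unmatched_t, unmatched_d


def byte_associate(tracks, detections, high_thr=0.6, min_iou=0.3):
    """One frame of two-stage association.

    tracks: dict track_id -> predicted box (Kalman prediction assumed done).
    detections: list of (box, confidence) pairs from the detector.
    Returns dict track_id -> box for the tracks kept in this frame.
    """
    ids = list(tracks)
    high = [box for box, s in detections if s >= high_thr]
    low = [box for box, s in detections if s < high_thr]

    # First association: high-confidence detections against all tracks.
    m1, rem_t, rem_d = greedy_match([tracks[i] for i in ids], high, min_iou)
    kept = {ids[ti]: high[di] for ti, di in m1}  # "update" = adopt the detection box

    # Second association: low-confidence detections against the remaining tracks.
    rem_ids = [ids[i] for i in rem_t]
    m2, _, _ = greedy_match([tracks[i] for i in rem_ids], low, min_iou)
    kept.update({rem_ids[ti]: low[di] for ti, di in m2})

    # Tracks unmatched in both rounds are dropped; leftover high-confidence detections start new tracks.
    next_id = max(ids, default=-1) + 1
    for di in rem_d:
        kept[next_id] = high[di]
        next_id += 1
    return kept


tracks = {0: (0, 0, 10, 10), 1: (20, 20, 30, 30), 2: (50, 50, 60, 60)}
detections = [((1, 1, 11, 11), 0.9), ((21, 21, 31, 31), 0.3), ((100, 100, 110, 110), 0.8)]
result = byte_associate(tracks, detections)
print(result)
```

In the example, track 0 is matched in the first round, track 1 is recovered in the second round by a low-confidence detection (score 0.3) that a single-threshold tracker would discard, track 2 is dropped, and the unmatched high-confidence detection starts track 3.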

To thoroughly evaluate the performance of the proposed model, this study utilizes a dataset comprising images of flying birds near airports, which were collected both through field photography and online sources. A total of 5,186 images were selected and divided into training, testing, and validation sets at a ratio of 7:2:1. Considering the visual similarity between birds and aircraft in real-world scenarios, 102 images containing both birds and airplanes were included in the dataset to reduce the risk of misclassification. Additionally, 72 images containing only airplanes were added as negative samples. To further assess the model's detection capability in dynamic scenes, five video clips of flying birds were also collected for training and evaluation, as illustrated in Figure 7.

Similar to most convolutional neural network-based detection methods [15, 16], this study utilizes the following evaluation metrics in the experiments: Precision (P), Recall (R), mean Average Precision (mAP), the number of Parameters (Param), and Giga Floating-point Operations (GFLOPs). For real-time detection, the Identification F1 score (IDF1), Multiple Object Tracking Accuracy (MOTA), and Multiple Object Tracking Precision (MOTP) metrics [17] are employed.
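For reference, the counting-based metrics above can be written as small functions. The definitions follow the usual conventions (CLEAR-MOT for MOTA and MOTP, identity-level counts for IDF1); MOTP is computed here as the mean IoU of matched pairs, which is one of several conventions in use, and the counts in the example are hypothetical. mAP, which averages the area under the precision-recall curve over classes, is omitted for brevity.

```python
def precision(tp, fp):
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    return tp / (tp + fn) if tp + fn else 0.0


def mota(fn, fp, id_switches, num_gt):
    """CLEAR-MOT accuracy: 1 - (misses + false positives + ID switches) / ground-truth objects."""
    return 1.0 - (fn + fp + id_switches) / num_gt


def motp(matched_ious):
    """Mean overlap of matched prediction/ground-truth pairs (one common convention)."""
    return sum(matched_ious) / len(matched_ious)


def idf1(idtp, idfp, idfn):
    """Identity F1: harmonic mean of identity precision and identity recall."""
    return 2 * idtp / (2 * idtp + idfp + idfn)


print(precision(90, 10), recall(90, 30), round(mota(fn=10, fp=5, id_switches=2, num_gt=100), 2))
print(idf1(idtp=80, idfp=20, idfn=20), round(motp([0.7, 0.8, 0.9]), 2))
```

Because MOTA subtracts all error counts from 1, it can become negative when errors exceed the number of ground-truth objects, whereas IDF1 rewards keeping the same identity on the same bird over time.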

To further demonstrate the robustness and effectiveness of the proposed approach, comparative experiments with SSD, EAANet [18], YOLOv5, YOLOv6, YOLOv7 [19], and YOLOv8 were conducted in the same configuration environment. Table 1 shows the results.

| Method | P | R | mAP | Param (M) | GFLOPs |
|---|---|---|---|---|---|
| SSD | 0.863 | 0.77 | 0.842 | 26 | 16.95 |
| EAANet | 0.924 | - | 0.901 | 7.1 | - |
| YOLOv5 | 0.926 | 0.82 | 0.893 | 7.3 | 16.5 |
| YOLOv6 | 0.887 | 0.72 | 0.872 | 4.63 | 11.34 |
| YOLOv7 | 0.899 | 0.82 | 0.893 | 6 | 13 |
| YOLOv8 | 0.926 | 0.82 | 0.896 | 3 | 8.1 |
| Ours | 0.949 | 0.84 | 0.91 | 2.2 | 6.1 |

The comparison shows that the proposed MFE-YOLO method is better suited to detecting flying birds in the airport environment: in Table 1 it achieves the highest P, R, and mAP while using the fewest parameters and GFLOPs. To further validate its real-time detection performance, this work compares the baseline YOLOv8 combined with Byte Track against the proposed method combined with Byte Track. The results are shown in Table 2.

Compared with the baseline YOLOv8 combined with Byte Track, the proposed method achieves higher IDF1 on all five sequences, higher MOTA on four sequences (equal on 005), and higher MOTP on three sequences (equal on 003 and marginally lower on 002). The algorithm effectively identifies flying birds in real airport scenes, indicating that the MFE-YOLO framework offers good stability and real-time capability in airport bird detection tasks.

| Methods | Dataset | IDF1 | MOTA | MOTP |
|---|---|---|---|---|
| YOLOv8+Byte Track | 001 | 19.7 | 11.5 | 28.02 |
| | 002 | 11.3 | 5.9 | 27.06 |
| | 003 | 57.8 | 69.1 | 75.67 |
| | 004 | 6.5 | 10.8 | 24.44 |
| | 005 | 51.1 | 71.6 | 84.69 |
| Ours+Byte Track | 001 | 63.2 | 82.6 | 49.06 |
| | 002 | 36.2 | 46 | 27.04 |
| | 003 | 85.4 | 88.2 | 75.67 |
| | 004 | 7.1 | 33.8 | 47.77 |
| | 005 | 51.7 | 71.6 | 90.12 |

To further verify the generalization capability of the proposed method, localization and detection experiments were conducted on the public MS COCO dataset, comparing the proposed algorithm with several widely used detectors: YOLOv5, YOLOv7, YOLOv8, Faster R-CNN, and SSD. As shown in Table 3, the proposed algorithm achieves the highest mAP (0.596) with the fewest parameters, indicating that it also localizes and recognizes bird targets well in natural environments.

| Method | mAP | Param (M) | GFLOPs |
|---|---|---|---|
| YOLOv5 | 0.568 | 7.3 | 16.5 |
| YOLOv7 | 0.528 | 6 | 13 |
| YOLOv8 | 0.526 | 3 | 8.1 |
| SSD | 0.431 | 26 | 16.95 |
| Faster R-CNN | 0.592 | 28.3 | - |
| Ours | 0.596 | 2.2 | 6.1 |

| Improvements | P | R | mAP | Param/M | GFLOPs | |||

| MF | EMA | Focal-Modulation | DCSlideLoss | |||||

| - | - | - | - | 0.926 | 0.82 | 0.896 | 3 | 8.1 |

| - | - | - | 0.915 | 0.80 | 0.884 | 2.1 | 5.8 | |

| - | - | - | 0.912 | 0.82 | 0.905 | 3 | 8.1 | |

| - | - | 0.935 | 0.80 | 0.892 | 3.1 | 8.4 | ||

| - | 0.941 | 0.81 | 0.9 | 2.2 | 6.1 | |||

| - | 0.938 | 0.82 | 0.887 | 3.1 | 8.4 | |||

| 0.946 | 0.84 | 0.91 | 2.2 | 6.1 | ||||

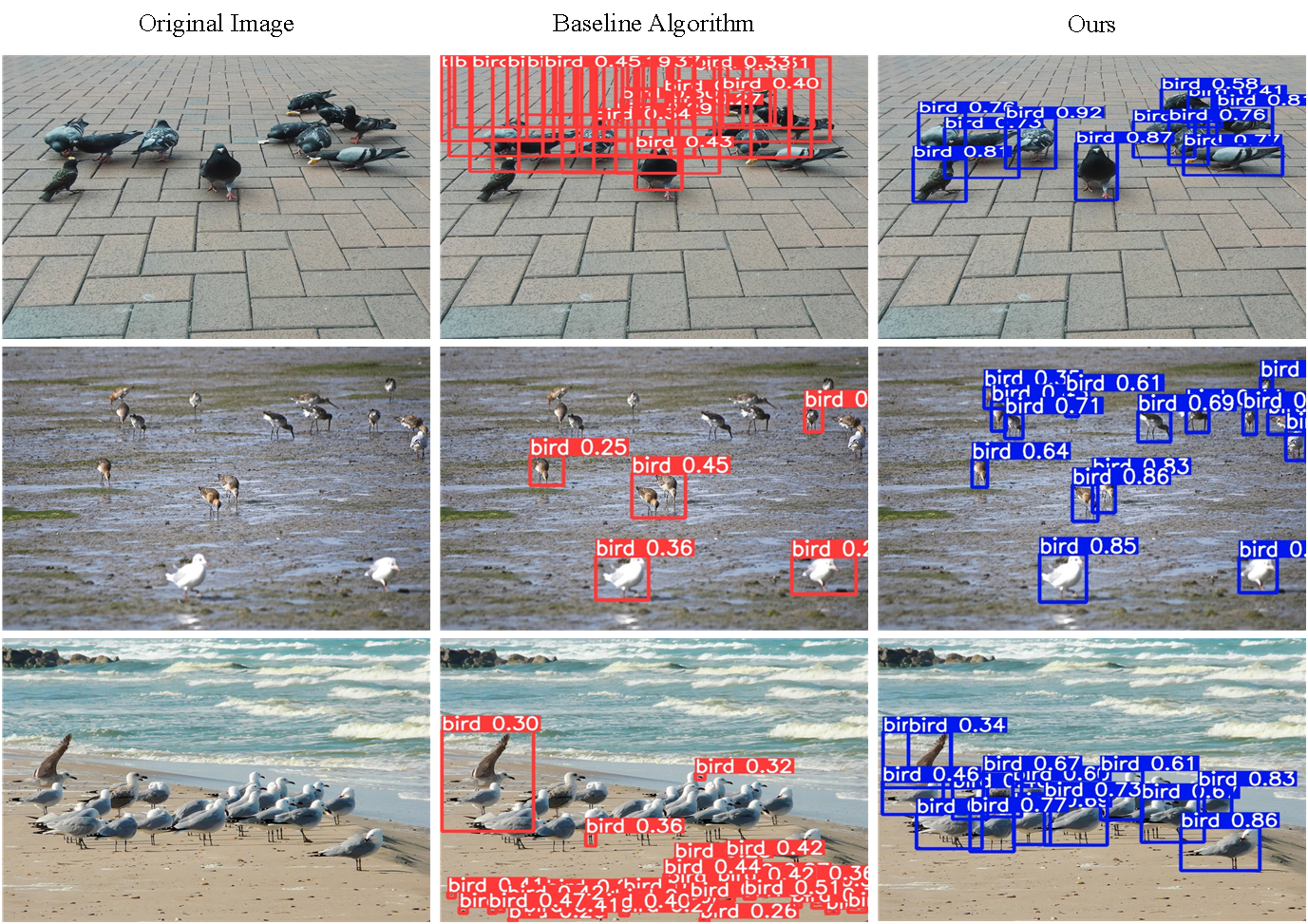

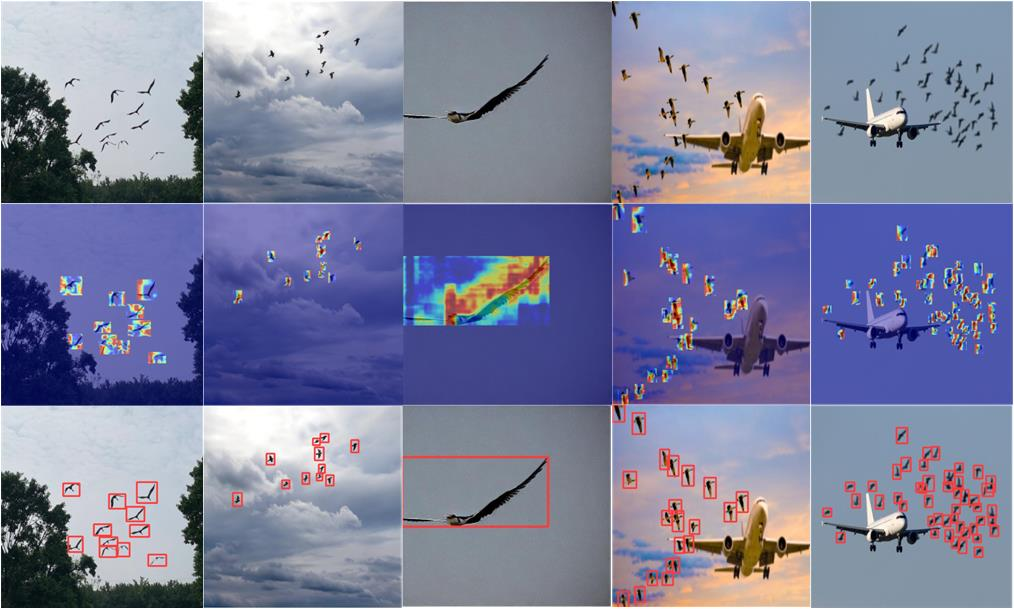

Figure 8 presents visual comparisons of the original images, the results of the baseline algorithm and the proposed method, and the corresponding results on the MS COCO dataset.

Ablation studies were conducted to validate the contribution of each proposed module. As shown in Table 4, introducing the MF module alone reduces the number of parameters by 30% (from 3.0 M to 2.1 M) and the computational cost by 28.4% (from 8.1 to 5.8 GFLOPs), at the cost of lower detection performance (mAP drops from 0.896 to 0.884). DCSlideLoss alone improves mAP by about 1.0% (0.896 → 0.905) without increasing model complexity.

Combining the EMA attention and Focal-Modulation mechanisms increases the parameters by 3.3% (3.0 M → 3.1 M) and GFLOPs by 3.7% (8.1 → 8.4), but raises precision by 1.3% (0.926 → 0.938). With all components integrated (MF + EMA + Focal-Modulation + DCSlideLoss), the final model achieves 2.5% higher precision (0.949 vs 0.926), 2.4% higher recall (0.84 vs 0.82), and a 1.6% mAP improvement (0.910 vs 0.896) over the baseline YOLOv8, while using 26.7% fewer parameters (2.2 M vs 3.0 M) and 24.7% less computation (6.1 vs 8.1 GFLOPs). These results indicate that the combined design balances efficiency and accuracy effectively. Figure 9 shows the localization maps and heat maps of the bird targets predicted by the model, which illustrate that MFE-YOLO can effectively detect bird targets in airport scenes.
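The percentages in the ablation discussion are relative changes with respect to the YOLOv8 baseline, $(v_{\text{after}} - v_{\text{before}})/v_{\text{before}} \times 100\%$. The short script below recomputes several of them from the values quoted in Table 4 and the text; the helper name `relative_change` is hypothetical.

```python
def relative_change(before, after):
    """Relative change in percent (negative means a reduction)."""
    return (after - before) / before * 100.0


# (description, baseline YOLOv8 value, value after the change)
comparisons = [
    ("Params, MF only (M)", 3.0, 2.1),
    ("GFLOPs, MF only", 8.1, 5.8),
    ("mAP, DCSlideLoss only", 0.896, 0.905),
    ("Params, EMA + Focal-Modulation (M)", 3.0, 3.1),
    ("GFLOPs, EMA + Focal-Modulation", 8.1, 8.4),
    ("Params, full model (M)", 3.0, 2.2),
    ("GFLOPs, full model", 8.1, 6.1),
    ("mAP, full model", 0.896, 0.910),
    ("Recall, full model", 0.82, 0.84),
]
for name, before, after in comparisons:
    print(f"{name}: {relative_change(before, after):+.1f}%")
```

Reporting relative rather than absolute changes makes parameter counts, GFLOPs, and accuracy metrics comparable on one scale, although small relative gains in mAP correspond to changes of only about 0.01 in absolute terms.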

In addition, ablation experiments were performed on DCSlideLoss using different values of the threshold parameter. As shown in Figure 10, as this parameter varies, the loss first decreases and then increases; this paper adopts the value at which the loss reaches its minimum.

To address the safety risks that flying birds pose during aircraft takeoff and landing, this study proposes a lightweight bird detection network, MFE-YOLO, for detecting birds in the low-altitude airspace of airports. Experiments show that the proposed network is more stable, lighter, and more accurate in identifying flying birds at airports. The MF module makes the network lighter, while DCSlideLoss, the Focal-Modulation module, and the EMA mechanism enhance the model's ability to fuse multi-scale features of flying birds. In future work, the model will be further optimized and tested in public safety, agricultural bird damage prevention, and UAV obstacle avoidance.


Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/iece/