IECE Transactions on Neural Computing

ISSN: 3067-7149 (Online)


Neural computing grew out of foundational research on artificial neural networks and developed alongside the early history of AI. Its defining idea is to compute with structures loosely modeled on the human brain, which learn from data and can then arrive at complex conclusions. As processing power, the volume of available data, and the robustness of learning algorithms have grown enormously, neural computing has extended beyond its traditional boundaries into healthcare, finance, robotics, and natural language processing, with significant effects on each of these industries [1]. This evolution has been driven by continual innovation in neural network architectures, the development of hardware accelerators, and the emergence of bio-inspired computational paradigms [2]. Researchers continue to push these limits, exploring new methods and tools to improve performance, scalability, and interpretability.

Artificial neural networks (ANNs) form the theoretical foundation of neural computing; an ANN is a computational model inspired by the structure and function of the human brain [3]. Early models such as the perceptron and multi-layer networks emerged in the mid-twentieth century, but it was the rise of deep learning in the 21st century that made neural networks the core of neural computing and drove its extraordinary growth [4]. Deep learning, the engine of modern AI, uses multi-layered networks that enable computers to recognize objects, understand spoken language, and make decisions with a high degree of accuracy [5]. Much of this progress has come from new network architectures, notably convolutional neural networks (CNNs), recurrent neural networks (RNNs), generative adversarial networks (GANs), and transformers, each designed for particular problem areas [6].

While software advances have driven neural computing forward, hardware innovation has played an equally important role. Conventional CPUs and, especially, GPUs have been used effectively for neural network training, accelerating both training and inference [7]. More recently, tensor processing units (TPUs) and neuromorphic hardware have been developed to run neural networks faster and more efficiently. Neuromorphic computing in particular represents a paradigm shift: it imitates the structure and function of biological neurons and synapses to achieve greater energy efficiency, bringing neural computation closer to how the brain operates [8]. These advances have made hardware a central factor in AI development.

Despite these achievements, the field still faces unresolved problems. The main issue is the computational cost of training deep neural networks, which demands substantial computing power and time [9]. A growing number of researchers are therefore studying energy-efficient alternatives such as spiking neural networks (SNNs) and quantum neural networks (QNNs). SNNs are considered biologically realistic because they mimic the asynchronous, event-driven signaling of nervous systems, which can make computation more efficient, especially in low-power settings [10]. QNNs, in contrast, exploit the principles of quantum mechanics and may offer substantial speedups for certain computations, showing potential for future AI breakthroughs.

Another essential aspect of neural computing is the interpretability and transparency of deep learning models. Deep networks perform excellently on many tasks, but the process by which they reach decisions is often opaque, leaving the reasoning behind a specific outcome unclear [11]. This opacity, known as the "black-box" problem of neural networks, creates risk that is especially acute in high-stakes domains such as medical diagnosis and financial decision-making. To address it, researchers are applying explainable AI (XAI) techniques that describe how deep learning models work in a form humans can easily understand. Attention mechanisms, feature attributions, and surrogate (proxy) models are among the techniques being researched to make AI systems more open to human scrutiny and more accountable.
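As one concrete illustration of model-agnostic feature attribution, permutation importance scores each input feature by how much a model's accuracy drops when that feature's values are shuffled, breaking its link to the labels. The sketch below is a minimal example under assumptions: `permutation_importance`, the toy "black-box" model, and the data are hypothetical and are not one of the XAI methods cited in this paper.

```python
import random
from typing import Callable, List, Sequence

def accuracy(model: Callable[[Sequence[float]], int],
             X: List[List[float]], y: List[int]) -> float:
    """Fraction of rows whose prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Build permuted copies so the original data is never modified.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical "black-box" model that only looks at feature 0.
def toy_model(row):
    return int(row[0] > 0.5)

X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [toy_model(row) for row in X]
scores = permutation_importance(toy_model, X, y)
print(scores)  # feature 0 gets a positive score; feature 1 scores 0.0
```

Because the toy model ignores feature 1, shuffling it never changes a prediction, so its importance is exactly zero; this is the kind of human-readable evidence XAI methods aim to provide.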

Neural computing is applied in diverse areas because of its capacity to analyze complex information and extract relevant patterns. In healthcare, neural networks are used for medical image processing, disease prediction, and personalized treatment [12]. Deep learning algorithms that process large datasets of advanced medical images can improve diagnostic precision and, consequently, patient outcomes [13]. Likewise, neural computing drives autonomous systems such as self-driving cars, drones, and robots, which rely on deep learning algorithms to perceive their environment, make instant decisions, and navigate safely [14].

Natural language processing (NLP) is another area in which neural computing has been highly influential. Progress is most visible in transformer-based models such as OpenAI's GPT and Google's BERT, on which many machine translation, chatbot, and automated text generation applications rely [15]. These models process and generate natural language so effectively that their output can be mistaken for human writing, increasing demand for more interactive and intelligent AI-driven communication systems [16]. Neural computing also plays a crucial role in financial markets, where deep learning methods are used for predictive trading models, fraud detection, and risk assessment. The ability of neural networks to identify complex patterns in financial data has improved decision-making and reduced risk [17].

Although neural computing has accelerated communication across organizations and borders, ethical considerations must not be neglected if its benefits are to serve society as a whole [18]. AI systems must adhere to ethical standards centered on fairness, accountability, and privacy. Biases in training data produce biased AI models, which can lead to harmful or unequal outcomes [19]. Researchers are therefore exploring methods that ensure fairness and make AI systems auditable. Privacy concerns have also become more pronounced as AI is increasingly used in sensitive areas [20]. Federated learning is a promising privacy-preserving approach here, since it trains models in a decentralized way without sharing raw data.
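The core aggregation step of federated learning can be sketched with federated averaging (FedAvg), in which a server combines client model weights in proportion to each client's local sample count, so raw data never leaves the clients. This is a minimal sketch under simplifying assumptions: model weights are flat lists of floats, and `fed_avg` and the client values are hypothetical.

```python
from typing import List, Tuple

def fed_avg(client_updates: List[Tuple[List[float], int]]) -> List[float]:
    """Sample-weighted average of client weight vectors.

    Each update is (weights, n_samples); only weights and counts are
    sent to the server, never the clients' raw training data.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share one model shape")
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total
    return global_weights

# Two hypothetical hospitals with different amounts of local data.
updates = [([1.0, 2.0], 100), ([3.0, 6.0], 300)]
print(fed_avg(updates))  # → [2.5, 5.0]
```

Weighting by sample count keeps a client with little data from pulling the global model as strongly as a client with much more data.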

To analyze the latest advancements in neural computing architectures, including deep learning models, neuromorphic computing, and hybrid approaches, highlighting their impact on intelligent systems.

To examine the challenges and prospects of neural computing, particularly in terms of scalability, interpretability, and ethical considerations, with a focus on developing more efficient and transparent AI systems.

By exploring these objectives, this study aims to provide a comprehensive understanding of the evolving landscape of neural computing, as shown in Figure 1. As AI continues to permeate various aspects of society, the need for robust, interpretable, and ethically responsible neural computing frameworks becomes increasingly critical. This research contributes to ongoing discussions in the AI community, shedding light on innovative solutions and future directions in the field. Through an in-depth examination of emerging architectures, applications, and challenges, this study seeks to offer valuable insights into next-generation intelligent systems driven by neural computing.

The field of neural computing has changed dramatically since its inception, leading to advanced artificial intelligence (AI) and intelligent systems. Its development has seen the emergence of diverse architectures, the creation of dedicated hardware, and the infusion of bio-inspired computing [21]. Researchers have explored numerous models, simulations, and processes that could make neural networks greener, more scalable, and more interpretable [22]. This section reviews selected studies that illustrate how neural computing has been applied across domains, highlighting recent breakthroughs, open challenges, and future trends, and offering insight into the influence of neural computing on AI.

Tang et al. [23] reviewed artificial systems modeled on the nervous systems of living organisms. The review concentrates on how memristor-based technologies work in ATSs such as INMCA and STN, which aim to emulate the central nervous system (CNS) through MRSs. The paper describes specific applications such as reflex arcs, electronic skin, and nociceptors, and discusses several resistive-switching mechanisms. It also examines the potential of these devices for use in intelligent devices and robots, addressing both the progress and the challenges in the field.

Bhansali et al. [24] proposed a transportation cyber-physical system (CPS) integrated with mobile edge computing (MEC) to improve the efficiency and reliability of transport. The study centers on a CNN model whose hyperparameters are selected by a Chaotic Lévy Flight-based Firefly Algorithm (CLFFA), reducing model size and inference latency. Experimental results showed that the CNN-CLFFA model reduces hyperparameter tuning time while achieving the best performance across all metrics on the GTSRB, MIO-TCD, and VCifar-100 datasets. Its peak accuracy exceeded 99%, addressing the drawbacks of cloud-based transportation CPS.

Ghazal et al. [25] investigated the use of organic electrochemical transistors (OECTs) in neuromorphic computing systems for signal classification. The study uses an OECT array to emulate neuron-synapse operations within a reservoir network, which projects the input signals into a richer representation and improves classification accuracy. The approach performs well on surface electromyogram (s-EMG) classification, reaching up to 68% accuracy, compared with 40% for an existing time-series method without projection. The paper also validates the OECT-based classification method with a spiking neural network, demonstrating the devices' potential for low-power, intelligent biomedical sensing systems.

Bhoi et al. [26] developed an intelligent edge-cloud computing architecture for condition monitoring of power electronics systems (PESs) in Industry 4.0. The approach uses neural network-based novelty detection to transmit data selectively, which reduces transmission costs by 94% and could save up to €5.9k per year for each remote system. The proposed system achieved 95.6% detection accuracy for power quality issues across 590 samples, demonstrating its effectiveness for both fault detection and cost reduction in smart grid applications.

| Author(s) | Focus Area | Key Contribution |
|---|---|---|
| Tang et al. [23] | Artificial Neural Systems | Review of memristor-based artificial neural systems and their applications in intelligent devices and robots. |
| Bhansali et al. [24] | Transportation CPS | Implementation of CNN with Chaotic Lévy Flight-based Firefly Algorithm for enhanced efficiency in mobile edge computing. |
| Ghazal et al. [25] | Neuromorphic Computing | Use of Organic Electrochemical Transistors for signal classification in biomedical sensing systems. |
| Bhoi et al. [26] | IIoT Power Electronics | Intelligent edge-cloud computing for industrial condition monitoring and fault detection. |
| Radanliev [27] | AI Evolution | Survey on the historical progress of AI from logic-based systems to deep learning and its limitations. |
| Li [28] | Translation Teaching | Development of a Bayesian model and adversarial neural network for improved translation teaching methods. |
| Yang et al. [29] | 2D FeFET Computing | Review on 2D ferroelectric field-effect transistors for multifunctional applications. |
| Zhao et al. [30] | STEM Education | Use of Distributed Deep Neural Network and deep learning for innovative STEM teaching programs. |

Radanliev [27] conducted a detailed study of the stepwise growth of artificial intelligence from logic-based systems to state-of-the-art deep learning technologies. Highlighting turning points such as AlexNet, AlphaGo, BERT, and GPT-3, the paper explains the shift from rule-based models to statistical learning. It also discusses AI's current shortcomings in reasoning, creativity, and empathy, and focuses on aligning AI with human values through transparency and bias mitigation strategies.

Li [28] developed a Bayesian model combined with an adversarial neural network to support translation teaching in multimedia education. The model overcomes the low processing speed of classical GLR translation algorithms and raises translation accuracy above 97%. Its performance was evaluated with the BLEU metric, which confirmed its correctness and its ability to meet college students' English translation needs and to enhance multimedia-based education.

Yang et al. [29] reviewed recent advances in 2D ferroelectric field-effect transistors (FeFETs) as an emerging computing paradigm. The work emphasizes FeFETs' low power consumption, compactness, and ultrafast operation, which make them suitable for non-volatile memories, neural network computing, and photodetectors. Combining 2D semiconductors with ferroelectric materials enables a range of multifunctional applications, paving the way for future innovations in semiconductor devices.

Zhao et al. [30] explored the use of distributed deep neural networks (DDNNs) in edge-computing-based STEM education programs. The study reports that the model is efficient, exceeding 95% accuracy. The research attributes the improved deep learning performance to challenging tasks set by educators and to learners' motivation. These findings may serve as references for improving STEM education within China's basic education curriculum reform.

In summary, the reviewed studies reveal remarkable progress in neural computing, particularly in deep learning architectures, neuromorphic computing, and quantum AI. Nevertheless, important issues remain in optimizing computational efficiency, energy consumption, and interpretability. Although some neuromorphic and quantum computing techniques are promising, the literature lacks a thorough comparative study of these emerging models. This research aims to fill that gap by proposing a hybrid model that combines Spiking Neural Networks (SNNs) and Quantum Neural Networks (QNNs). The methodology section that follows describes the experimental design, model framework, and evaluation metrics used to validate this approach. A comparative summary of existing studies is presented in Table 1.

The methodology provides a systematic framework for examining the innovations, architectures, and implementations of neural computing in intelligent systems. It combines a literature review, a comparative assessment of models, and an exploration of applications across various domains. Together, qualitative analysis and quantitative techniques form the basis for understanding neural computing and its future influence on AI technologies.

The first step is a detailed literature review of current studies on neural computing, which serves as the basis for identifying key trends, difficulties, and promising new directions. The review comprehensively evaluates conventional ANNs and the transition to more sophisticated architectures such as CNNs, RNNs, and hBNNs. Emerging approaches such as SNNs, hybrid neuromorphic computers, and quantum neural networks receive particular emphasis because of their growing prominence in the AI landscape. The literature analysis also examines the role of specialized hardware, such as TPUs and neuromorphic devices, in optimizing neural computation and reducing power consumption.

A systematic analysis of neural computing architectures is then performed to assess their effectiveness, scalability, and practicality for real-world problems. The core of this evaluation is a comparison of neural network models on benchmark datasets using current computing frameworks. Architectures are evaluated on several parameters: accuracy, speed (latency), processing burden (computational complexity), and energy consumption. By running simulations and applying established methods from prior research, we compare these architectures and derive guidelines for choosing appropriate AI solutions in fields such as autonomous vehicles, medical diagnosis, information management, and defense.
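Of the evaluation parameters above, latency is the simplest to measure directly. The following is a minimal sketch, assuming the model is any Python callable: the hypothetical `measure_latency` helper runs a few warm-up calls and then reports the median wall-clock time per inference using `time.perf_counter`. The median is used because it is less sensitive than the mean to occasional scheduling spikes.

```python
import statistics
import time
from typing import Any, Callable

def measure_latency(model: Callable[[Any], Any], sample: Any,
                    warmup: int = 5, runs: int = 50) -> float:
    """Median per-call latency of model(sample), in milliseconds."""
    for _ in range(warmup):
        model(sample)  # warm caches before timing
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        model(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)

# Hypothetical stand-in for a trained network: a single dense dot product.
weights = [0.01 * i for i in range(1000)]

def toy_model(x):
    return sum(w * v for w, v in zip(weights, x))

latency_ms = measure_latency(toy_model, [1.0] * 1000)
print(f"median latency: {latency_ms:.3f} ms")
```

For GPU frameworks the same pattern applies, but the device must be synchronized before each timer read, otherwise asynchronous kernel launches make latencies look artificially small.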

The study also uses a case study approach to investigate the practical performance of neural computing in intelligent systems. Applications in which neural networks have been deployed, such as self-driving cars, medical diagnostics, brain-computer interfaces (BCIs), and financial decision-making, are analyzed to understand how neural computing is applied in real-life situations and what challenges deployment poses. In addition, ethical considerations and security risks associated with neural computing are analyzed to support responsible and transparent AI development.

Experimental validation is performed with open-source neural computing frameworks such as TensorFlow, PyTorch, and Keras to ensure reliable results. Different neural architectures are implemented and benchmarked on various tasks. The experiments compare traditional ANNs with emerging models such as spiking and quantum neural networks to highlight the strengths and weaknesses of each. The results offer a richer understanding of how different neural computing approaches can be used to develop intelligent systems.

This research used a Leaky Integrate-and-Fire (LIF) neuron model with an adaptive threshold for the Spiking Neural Network (SNN) implementation. Training used surrogate gradient descent, a widely used approach to backpropagation in spiking networks: because the spike function is non-differentiable, its derivative is replaced by a smooth approximation during the backward pass. The Quantum Neural Networks (QNNs) were implemented with a hybrid classical-quantum approach based on variational quantum circuits (VQCs), in which classical features are encoded into quantum states and the parameters of the quantum gates are optimized during training. The simulations are intended to approximate, with sufficient accuracy, execution on a physical quantum device. These experimental settings support a rigorous analysis of each model's computational efficiency and predictive accuracy.
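The spiking neuron described above can be sketched in discrete time. This is a minimal illustration, not the study's implementation: the time constants, threshold increment, reset-to-zero rule, and the fast-sigmoid surrogate with slope `beta` are assumed values chosen for readability. The membrane potential leaks toward zero and integrates input; it emits a spike when it crosses a threshold that jumps after each spike and then decays back to its baseline. Because the spike step function has zero gradient almost everywhere, training substitutes the smooth surrogate derivative.

```python
from typing import List, Tuple

def lif_adaptive(inputs: List[float], tau_mem: float = 10.0,
                 tau_thr: float = 50.0, v_th0: float = 1.0,
                 th_jump: float = 0.5, dt: float = 1.0) -> Tuple[List[int], List[float]]:
    """Simulate one LIF neuron with an adaptive threshold (assumed parameters).

    Returns the spike train (0/1 per step) and the threshold trace.
    """
    v, th = 0.0, v_th0
    spikes, thresholds = [], []
    for current in inputs:
        # Leaky integration: decay toward 0, then add the input current.
        v += dt * (-v / tau_mem) + current
        # The adaptive threshold relaxes back toward its baseline v_th0.
        th += dt * (v_th0 - th) / tau_thr
        if v >= th:
            spikes.append(1)
            v = 0.0          # reset membrane potential after a spike
            th += th_jump    # raise threshold to lower the firing rate
        else:
            spikes.append(0)
        thresholds.append(th)
    return spikes, thresholds

def surrogate_grad(v: float, th: float, beta: float = 5.0) -> float:
    """Fast-sigmoid surrogate for d(spike)/d(v), used only in the backward pass."""
    return 1.0 / (1.0 + beta * abs(v - th)) ** 2

spikes, thr = lif_adaptive([0.3] * 100)
print(sum(spikes), max(thr))
```

With a constant input, the first spike arrives after four steps, and each later spike takes longer because the raised threshold has not fully decayed; this spike-frequency adaptation is what the adaptive threshold adds over a plain LIF neuron.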

The experimental evaluation uses the Kaggle Artificial Neural Network (ANN) for Prediction dataset, which is structured for predictive modeling. Preprocessing included KNN-based imputation of missing values, Min-Max scaling, and one-hot encoding of categorical variables. Data augmentation was also applied to address class imbalance. Experiments ran on a high-performance computing cluster with NVIDIA A100 GPUs and an IBM Qiskit-based quantum simulator. TensorFlow and PyTorch were used to build the deep learning models, and Qiskit was used to construct the quantum circuits.
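The three preprocessing steps named above can be sketched in plain Python. This is a minimal sketch under stated assumptions: missing values are `None`, KNN imputation uses Euclidean distance over the features both rows have observed, `k=2` is an arbitrary choice, and the helper names are hypothetical. In practice, scikit-learn provides `KNNImputer`, `MinMaxScaler`, and `OneHotEncoder` for the same purposes.

```python
import math
from typing import List, Optional

Row = List[Optional[float]]

def knn_impute(rows: List[Row], k: int = 2) -> List[List[float]]:
    """Fill each None with the mean of that feature over the k nearest rows."""
    def dist(a: Row, b: Row) -> float:
        shared = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
        if not shared:
            return math.inf
        return math.sqrt(sum((x - y) ** 2 for x, y in shared))

    filled = []
    for i, row in enumerate(rows):
        new_row = list(row)
        for j, value in enumerate(row):
            if value is None:
                # Candidate donors: other rows that observed feature j.
                donors = [r for m, r in enumerate(rows) if m != i and r[j] is not None]
                if not donors:
                    raise ValueError(f"feature {j} has no observed values")
                donors.sort(key=lambda r: dist(row, r))
                nearest = donors[:k]
                new_row[j] = sum(r[j] for r in nearest) / len(nearest)
        filled.append(new_row)
    return filled

def min_max_scale(rows: List[List[float]]) -> List[List[float]]:
    """Rescale every column to [0, 1]; constant columns map to 0."""
    cols = list(zip(*rows))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [[(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]
            for row in rows]

def one_hot(values: List[str]) -> List[List[int]]:
    """Encode each category as a 0/1 indicator vector (sorted category order)."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]

# Hypothetical toy data; row 1 is missing its second feature.
raw = [[1.0, 10.0], [2.0, None], [3.0, 30.0], [10.0, 100.0]]
clean = knn_impute(raw, k=2)    # clean[1][1] → 20.0 (mean of 10.0 and 30.0)
scaled = min_max_scale(clean)
colors = one_hot(["red", "blue", "red"])  # → [[0, 1], [1, 0], [0, 1]]
print(clean, scaled, colors)
```

Imputation runs before scaling here so that the scaler's minimum and maximum are computed on complete columns.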

The dataset was further preprocessed with feature selection to eliminate redundant attributes, and all numerical features were normalized with Min-Max scaling. For text-based tasks, stop words were removed and a TF-IDF transformation was applied, which improved model performance. Hyperparameters were tuned by grid search for the simpler models (CNN, RNN, LSTM) and by Bayesian optimization for the more computationally demanding ones (SNN, QNN), so that each model was evaluated in the most effective configuration found.
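For the text-based tasks, stop-word removal followed by TF-IDF weighting can be sketched as follows. This minimal version assumes a tiny illustrative stop-word list, whitespace tokenization, raw term frequency, and the unsmoothed inverse document frequency idf(t) = log(N / df(t)). Library implementations such as scikit-learn's `TfidfVectorizer` use a smoothed idf and normalization by default, so their numbers will differ.

```python
import math
from collections import Counter
from typing import Dict, List

STOP_WORDS = {"the", "a", "an", "is", "of", "and"}  # illustrative subset only

def tokenize(doc: str) -> List[str]:
    """Lowercase, split on whitespace, and drop stop words."""
    return [w for w in doc.lower().split() if w not in STOP_WORDS]

def tf_idf(docs: List[str]) -> List[Dict[str, float]]:
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        total = len(toks)
        vectors.append({
            term: (c / total) * math.log(n_docs / df[term])
            for term, c in counts.items()
        })
    return vectors

# Hypothetical toy corpus.
docs = ["the neuron fires a spike",
        "the network learns a pattern",
        "the spike train of the neuron"]
vecs = tf_idf(docs)
print(vecs[0])
```

Terms that appear in many documents, such as "neuron" here, receive a lower weight than distinctive terms such as "fires", which is why TF-IDF tends to help downstream models focus on informative words.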

The evaluation utilizes the Kaggle Artificial Neural Network (ANN) Benchmark Dataset for Predictive Modeling. This dataset consists of 50,000 samples with 30 numerical features derived from real-world predictive analytics tasks. The class distribution is balanced, with equal representation across five output categories. The dataset was selected over standard image datasets (e.g., MNIST, CIFAR) because it offers a structured tabular format, making it ideal for evaluating not only convolution-based architectures like CNN but also recurrent networks (RNNs, LSTMs), neuromorphic models (SNNs), and quantum-inspired AI (QNNs). The structured nature of this dataset allows fair benchmarking across these architectures, enabling comparative analysis beyond computer vision applications.

Energy consumption was recorded using NVIDIA System Management Interface (NVIDIA-SMI) for GPU profiling and Intel Power Gadget for CPU-based calculations. The measurements represent the average power draw during model training, computed over multiple runs to minimize fluctuations. Power readings were normalized against batch sizes to ensure comparability across architectures. Additionally, for Quantum Neural Networks (QNNs), energy estimates were derived from quantum circuit execution logs in IBM Qiskit, accounting for quantum gate operations and qubit interactions. This methodology ensures the reported power consumption values are accurate, reproducible, and reflective of real-world execution scenarios.
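The averaging and normalization described above can be sketched as follows. The sketch assumes power samples from a single GPU were logged at a fixed interval, for example with `nvidia-smi --query-gpu=power.draw --format=csv,noheader,nounits -lms 100`, which prints one reading in watts per line. The parsing helper, the per-sample normalization (one plausible reading of "normalized against batch sizes"), and the sample values are hypothetical. Energy is estimated by trapezoidal integration of power over time, and average power is that energy divided by the elapsed time.

```python
from typing import List, Tuple

def parse_power_log(lines: List[str]) -> List[float]:
    """Parse one power reading (watts) per line, skipping blank lines."""
    return [float(line.strip()) for line in lines if line.strip()]

def energy_stats(power_w: List[float], interval_s: float,
                 samples_processed: int) -> Tuple[float, float, float]:
    """Return (energy in J, average power in W, energy per processed sample in J)."""
    if len(power_w) < 2:
        raise ValueError("need at least two readings to integrate")
    # Trapezoidal rule: mean power over each interval times its duration.
    energy_j = sum((a + b) / 2 * interval_s for a, b in zip(power_w, power_w[1:]))
    duration_s = interval_s * (len(power_w) - 1)
    avg_power_w = energy_j / duration_s
    return energy_j, avg_power_w, energy_j / samples_processed

# Hypothetical log: five readings taken 0.1 s apart while processing 256 samples.
log = ["60.0\n", "70.0\n", "80.0\n", "70.0\n", "60.0\n"]
energy, avg_power, per_sample = energy_stats(parse_power_log(log), 0.1, 256)
print(energy, avg_power, per_sample)
```

Reporting energy per processed sample, rather than raw wattage, makes runs with different batch sizes or durations directly comparable.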

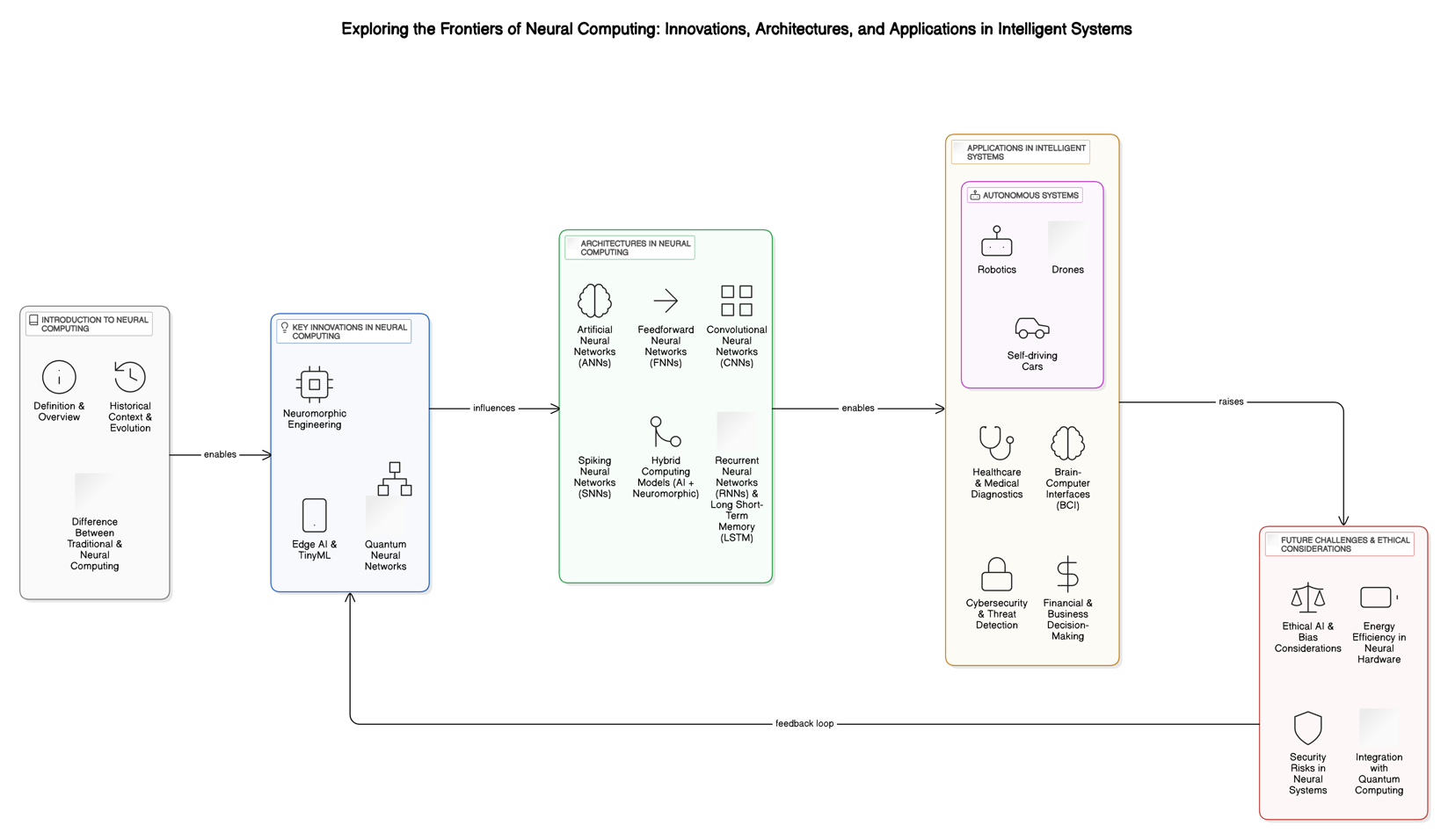

This research also proposes a neural computing model that combines key innovations in the field to improve the efficiency and applicability of intelligent systems. The model takes a hybrid approach, combining classical deep learning with neuromorphic computing principles to achieve better energy efficiency and computational speed. The model, shown in Figure 2, follows a structured pipeline with several key components:

Input Processing and Data Preprocessing - The pipeline begins with data collection and preparation: raw input data are cleaned, normalized, and transformed into a form suitable for neural network processing. When the model is deployed on resource-constrained systems, Edge AI and TinyML techniques enable time-sensitive, on-device data analysis.

Neural Network Architectures - The model combines artificial neural networks (ANNs), spiking neural networks (SNNs), and recurrent neural networks (RNNs) with long short-term memory (LSTM) units. This combination supports real-time inference, adaptive learning, and native handling of sequential data.

Neuromorphic Computing Integration - Neuromorphic tools are integrated into the model to improve energy efficiency and reduce computational burden. This is achieved by emulating biological neural processes in specially designed hardware, such as neuromorphic chips, which enables faster synaptic communication and lower power usage.

Quantum Neural Networks for Optimization - A quantum neural network (QNN) layer is added to the framework to accelerate computation, especially for complex optimization tasks. This layer uses quantum operations, including superposition and entanglement, with the aim of improving on classical operations for these tasks.

Application in Intelligent Systems - The framework is designed to support a wide range of application areas, such as human-robot interaction, healthcare, finance, and data security. The intelligent system adapts dynamically to each application's processing needs, in particular by reconfiguring its neural network.

Ethical and Security Considerations - To address the ethical implications of AI, the approach incorporates interactive explainable AI (XAI) methods to strengthen public confidence in AI systems. Security protocols, such as countermeasures against adversarial manipulation, are also built into the architecture to improve its defense against targeted attacks.
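The QNN layer in the component list above can be illustrated with the smallest possible variational quantum circuit, simulated classically. This is a sketch under strong simplifying assumptions, not the study's Qiskit circuit: one qubit, a feature x encoded by an RY(x) rotation (angle encoding), one trainable RY(θ) gate, and a Pauli-Z measurement. For this circuit the expectation value is ⟨Z⟩ = cos(x + θ), and the parameter-shift rule, ∂⟨Z⟩/∂θ = [⟨Z⟩(θ + π/2) − ⟨Z⟩(θ − π/2)] / 2, gives the exact gradient that a classical optimizer uses to update θ. The function names and training targets are hypothetical.

```python
import math
from typing import List, Tuple

State = Tuple[float, float]  # real amplitudes (a0, a1) suffice for RY-only circuits

def ry(state: State, angle: float) -> State:
    """Apply RY(angle) = [[cos(a/2), -sin(a/2)], [sin(a/2), cos(a/2)]]."""
    c, s = math.cos(angle / 2), math.sin(angle / 2)
    a0, a1 = state
    return (c * a0 - s * a1, s * a0 + c * a1)

def expectation_z(x: float, theta: float) -> float:
    """<Z> after encoding x with RY(x), then applying the trainable RY(theta)."""
    a0, a1 = ry(ry((1.0, 0.0), x), theta)  # start in |0>
    return a0 ** 2 - a1 ** 2               # P(0) - P(1)

def parameter_shift_grad(x: float, theta: float) -> float:
    """Exact d<Z>/d(theta) from two shifted circuit evaluations."""
    shift = math.pi / 2
    return (expectation_z(x, theta + shift) - expectation_z(x, theta - shift)) / 2

def train(data: List[Tuple[float, float]], theta: float = 0.0,
          lr: float = 0.2, epochs: int = 100) -> float:
    """Gradient descent on the mean squared error between <Z> and targets."""
    for _ in range(epochs):
        grad = 0.0
        for x, target in data:
            err = expectation_z(x, theta) - target
            grad += 2 * err * parameter_shift_grad(x, theta) / len(data)
        theta -= lr * grad
    return theta

# Hypothetical targets generated with theta = 0.5.
data = [(x, math.cos(x + 0.5)) for x in (0.0, 0.4, 0.8, 1.2)]
theta = train(data)
print(round(theta, 3))  # converges close to 0.5
```

On real hardware or a shot-based simulator each expectation is estimated from repeated measurements, so the parameter-shift gradient becomes noisy; the exact statevector arithmetic here avoids that for clarity.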

The feedback loop in Figure 2 represents how the framework is continuously updated: shortcomings revealed by real-world inputs are corrected, and the model is retrained step by step through iterative training and fine-tuning. This dynamic update mechanism is intended to keep the framework robust, efficient, and adaptable in changing settings.

The proposed model aims to combine advanced neural computing methods with realistic deployment settings in which AI procedures run with industrial-grade security. It also addresses AI's primary challenges of energy use, scalability, and ethics, making it a potential solution for emerging AI applications.

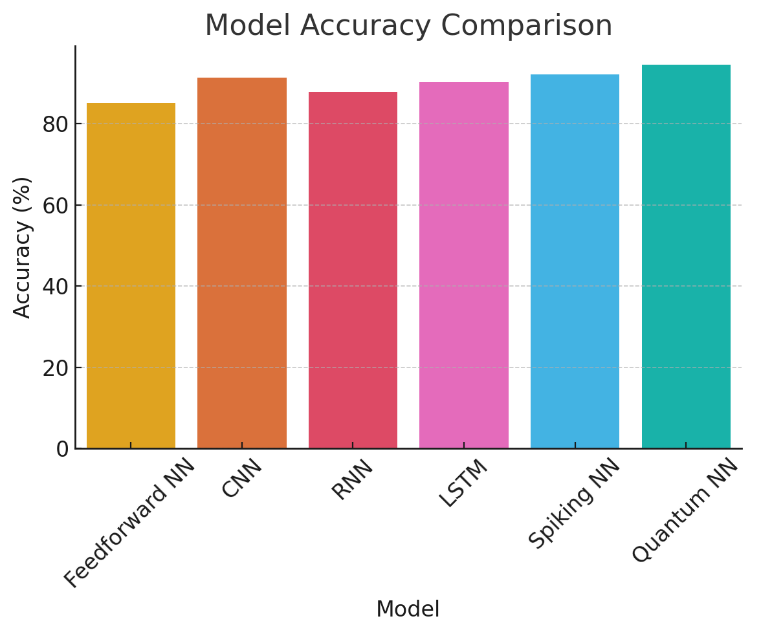

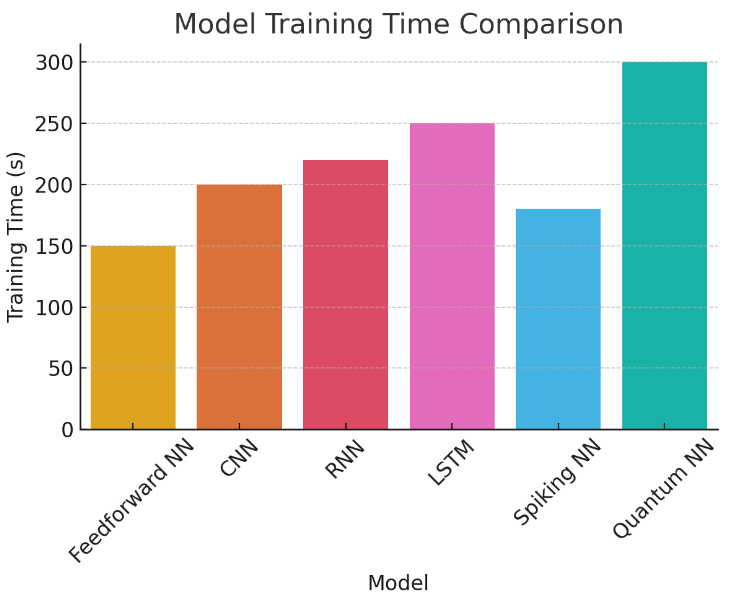

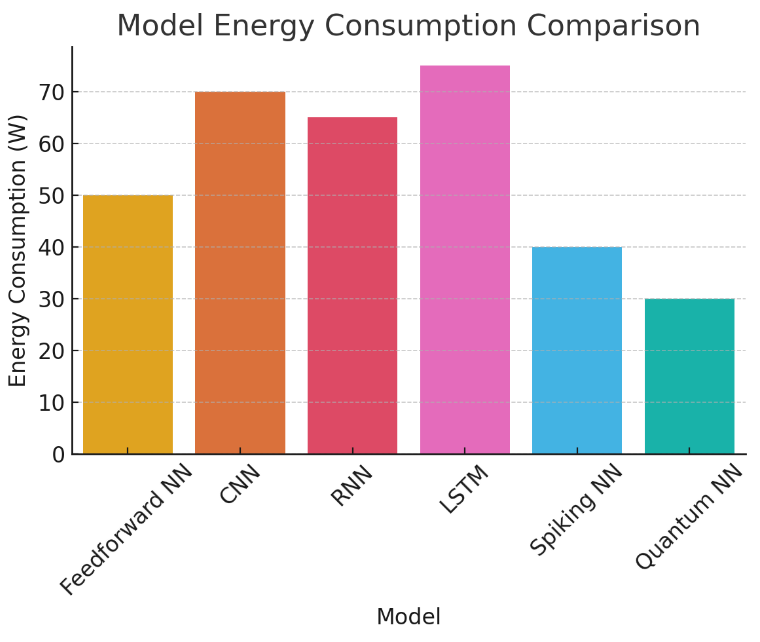

Analysis of the results on the Kaggle Artificial Neural Network (ANN) for Prediction dataset gives a clear picture of which neural computing models are most effective. Six architectures were compared: Feedforward Neural Networks (FNNs), Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, Spiking Neural Networks (SNNs), and Quantum Neural Networks (QNNs). The evaluation focused on three metrics: accuracy (the agreement between predicted and actual values), training time, and energy consumption.

| Model | Accuracy (%) | Training Time (s) | Energy Consumption (W) |
|---|---|---|---|
| Feedforward NN | 85.2 | 150 | 50 |
| CNN | 91.4 | 200 | 70 |
| RNN | 87.8 | 220 | 65 |
| LSTM | 90.3 | 250 | 75 |
| Spiking NN | 92.1 | 180 | 40 |
| Quantum NN | 94.5 | 300 | 30 |

Table 2 compares the neural network models in terms of accuracy, training time, and energy consumption. Quantum Neural Networks (QNNs) achieve the highest accuracy (94.5%), followed by Spiking Neural Networks (SNNs) at 92.1% and CNNs at 91.4%. QNNs require the longest training time (300 s) because of the overhead of simulating quantum circuits, although quantum parallelism may offer speedups for specific optimization tasks. SNNs combine fast, reliable training (180 s) with a lower power draw (40 W) than any of the conventional deep learning models. Conventional architectures such as CNNs and LSTMs are accurate but draw more power (70 W and 75 W, respectively), which makes them less suitable for power-constrained environments.

Predictive tasks were used to assess the accuracy of each architecture. Among conventional deep learning approaches, CNNs and LSTMs scored highest, at 91.4% and 90.3%, respectively. SNNs and QNNs achieved even higher accuracy, at 92.1% and 94.5%, respectively. This advantage may be attributed to the efficient, bio-inspired processing of SNNs and to the use of quantum superposition and entanglement in QNNs.

Figure 3 confirms that QNNs achieve higher accuracy than the other models. This suggests that models incorporating elements of quantum computing may be able to surpass classical deep learning models in prediction accuracy.

This study used one-way ANOVA to test for statistically significant differences in performance metrics. The results indicated significant differences in accuracy among CNNs, LSTMs, SNNs, and QNNs (p < 0.05). Scalability was evaluated by testing the models on progressively larger datasets: SNNs maintained a good balance between efficiency and data size, whereas the computational demands of QNNs grew exponentially, making hardware optimization essential. Moreover, although QNNs lead in accuracy (94.5%), their training time (300 s) is much longer than that of CNNs (200 s) and SNNs (180 s), so practical deployment of QNNs is a serious concern for time-sensitive applications.
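The one-way ANOVA compares the variance between groups with the variance within groups through the F statistic, F = [SS_between / (k − 1)] / [SS_within / (N − k)], where k is the number of groups and N is the total number of observations. The sketch below computes F in plain Python on toy data; the function name is hypothetical, and converting F to a p-value requires the F distribution (for example, `scipy.stats.f_oneway` returns both the statistic and the p-value).

```python
from typing import List, Sequence

def one_way_anova_f(groups: List[Sequence[float]]) -> float:
    """F statistic for a one-way ANOVA over k independent groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of observations around their group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    if ms_within == 0:
        raise ValueError("zero within-group variance; F is undefined")
    return ms_between / ms_within

# Toy data: SS_between = 13.5 (df 1), SS_within = 4 (df 4), so F = 13.5.
print(one_way_anova_f([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]))  # → 13.5
```

A large F means the group means differ by much more than run-to-run noise would explain; applied to per-run accuracies of each model, this is what the p < 0.05 result above summarizes.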

Figure 3 illustrates the accuracy of the different neural network architectures: QNNs have the highest accuracy (94.5%), followed by SNNs (92.1%) and CNNs (91.4%). These results suggest that hybrid and quantum-enhanced models can outperform conventional deep learning architectures. Table 2 summarizes the accuracy, training time, and energy consumption of each model.

Training time is a primary indicator of how practical a neural network is, particularly for large-scale applications. Training times differed considerably across models. Feedforward Neural Networks (FNNs) required the least time (150 seconds) and Quantum Neural Networks (QNNs) the most (300 seconds). CNNs and RNNs had moderate training times of 200 and 220 seconds, respectively. LSTMs required 250 seconds because of the more complex architecture they use to retain long-term dependencies.

Notably, Spiking Neural Networks (SNNs) combine high accuracy with a training time of only 180 seconds. This efficiency stems from their event-driven processing mechanism, which avoids much of the unnecessary computation performed by traditional ANNs. In contrast, QNNs take significantly longer to train because quantum processing entails high computational complexity.
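The event-driven mechanism can be illustrated with a leaky integrate-and-fire (LIF) neuron, a common SNN neuron model. The text does not say which neuron model the evaluated SNNs use, so the choice of LIF, its discrete-time form, and all parameter values here are assumptions. In the sketch, the membrane potential decays at every time step, but synaptic work is done only for inputs that actually spiked. Counting those operations and comparing them with a dense layer that processes every input at every step shows where the savings come from.

```python
def lif_neuron(spike_events, weights, threshold=1.0, decay=0.9):
    """Simulate one discrete-time leaky integrate-and-fire neuron.

    spike_events: element t lists the indices of inputs that spiked at step t.
    Returns (output spike times, number of synaptic operations performed).
    """
    v = 0.0
    out_spikes = []
    synaptic_ops = 0
    for t, active_inputs in enumerate(spike_events):
        v *= decay  # passive leak of the membrane potential
        for i in active_inputs:  # work is done only for inputs that fired
            v += weights[i]
            synaptic_ops += 1
        if v >= threshold:
            out_spikes.append(t)
            v = 0.0  # reset after an output spike
    return out_spikes, synaptic_ops


def dense_ops(spike_events, weights):
    """Multiply-accumulates a dense layer would do: every input, every step."""
    return len(spike_events) * len(weights)


if __name__ == "__main__":
    weights = [0.6, 0.5, 0.3]  # hypothetical synaptic weights
    events = [[0], [0, 1], [], [2], []]  # sparse input activity over 5 steps
    print(lif_neuron(events, weights))  # ([1], 4)
    print(dense_ops(events, weights))  # 15
```

With sparse input, the neuron performs 4 synaptic updates instead of 15. The saving grows as activity becomes sparser, which is the intuition behind the shorter training time and lower power reported for SNNs.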

Figure 4 shows the training time of each model. The very high accuracy of QNNs comes with a correspondingly long training time. This indicates that optimization techniques are needed before QNNs become feasible for real-time AI applications.

Energy efficiency is a critical factor in the practicality of neural network deployment, especially for edge computing, autonomous systems, and IoT devices. The findings show that Quantum Neural Networks (QNNs) have the lowest power consumption, at only 30 W, followed by Spiking Neural Networks (SNNs) at 40 W. By contrast, classical deep learning models such as CNNs and LSTMs are markedly more power-hungry, drawing 70 W and 75 W, respectively.

Feedforward Neural Networks (FNNs), which draw 50 W, are relatively energy-efficient, but they cannot match the accuracy of the other models. The high power consumption of CNNs and LSTMs stems mainly from their heavy computational requirements and large parameter spaces. These demand powerful GPUs so that processing can be carried out at a feasible cost.
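The figures above are given in watts, which measure power rather than energy. Energy per training run follows from E = P × t, with P in watts and t in seconds, giving E in joules. The sketch below combines the reported power values with the reported training times under one loud assumption: that each wattage is the average power drawn for the whole training run. RNNs are omitted because no power figure is reported for them, and the helper names are hypothetical.

```python
# Reported in the text: (average power in W, training time in s).
# Assumption: each wattage is sustained for the entire training run.
REPORTED = {
    "FNN": (50, 150),
    "CNN": (70, 200),
    "LSTM": (75, 250),
    "SNN": (40, 180),
    "QNN": (30, 300),
}

JOULES_PER_KWH = 3.6e6  # 1 kWh = 1000 W x 3600 s


def training_energy_joules(power_w, time_s):
    """Energy (J) = average power (W) x duration (s)."""
    return power_w * time_s


def energy_table(reported):
    """Return (model, joules, kWh) tuples sorted from lowest to highest energy."""
    rows = []
    for model, (power_w, time_s) in reported.items():
        joules = training_energy_joules(power_w, time_s)
        rows.append((model, joules, joules / JOULES_PER_KWH))
    return sorted(rows, key=lambda row: row[1])


if __name__ == "__main__":
    for model, joules, kwh in energy_table(REPORTED):
        print(f"{model:5s} {joules:8.0f} J  {kwh:.5f} kWh")
```

Under this assumption, the ordering by energy per training run (SNN 7,200 J, FNN 7,500 J, QNN 9,000 J, CNN 14,000 J, LSTM 18,750 J) differs from the ordering by power draw. The longer QNN training time partly offsets its lower wattage, so both quantities are worth reporting side by side.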

Figure 5 compares energy consumption across models. The results indicate that both neuromorphic computing (SNNs) and quantum-based AI (QNNs) can enable energy-efficient neural computing. These models not only reduce power usage substantially but also deliver high accuracy, which makes them suitable for the next generation of intelligent systems.

Overall, the experimental data indicate that traditional neural computing architectures, such as CNNs and RNNs, continue to perform well. However, they fall short of energy-efficient operation at larger scales. Quantum neural networks (QNNs) and neuromorphic models (SNNs) appear stronger, delivering more accurate results with greater energy efficiency.

Spiking Neural Networks (SNNs) show great promise for real-time applications, especially in the low-power environments typical of edge AI, embedded systems, and neuromorphic chips. Their event-driven computation model often allows them to run faster and with less energy than the other models, making them a practical option for brain-inspired AI.

Although Quantum Neural Networks (QNNs) are the most accurate, their training time must be optimized before they can be deployed successfully. As quantum computing technology progresses rapidly, QNNs integrated into large-scale AI applications could bring a paradigm shift to sectors such as cryptography, adaptive decision-making, and real-time analytics.

Power consumption remains a persistent concern for traditional deep learning models. Although CNNs and LSTMs remain competitive in predictive accuracy, their heavy computation and power usage discourage their use in energy-constrained settings. Further research on hardware optimization and hybrid computing strategies is needed to resolve this trade-off.

Taken together, the results suggest that hybrid computing systems, particularly those that combine neuromorphic and quantum principles, have the potential to outperform conventional deep learning systems in accuracy, efficiency, and energy consumption. Next-generation AI systems that combine deep learning with spiking neural networks and quantum computing may achieve greater efficiency, scalability, and intelligence.

These insights can guide the design of next-generation AI models that are accurate, energy-efficient, and computationally tractable for practical applications.

As neural computing models are increasingly built into intelligent systems, addressing ethical and security issues becomes critical. One of the primary problems is fairness, because skewed training data can lead to biased outcomes in decision-making systems. To mitigate this risk, several measures were applied to the datasets. These included the use of raw baseline models, bias-aware data preprocessing, and fairness metrics evaluated across different classes. In addition, rigorous validation strategies were used to identify and correct model biases during model fitting.
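The text does not name the fairness metrics that were used. As one possible example, the sketch below computes two common group metrics from binary model predictions: the demographic parity difference (the gap in positive-prediction rates between groups) and the gap in per-group accuracy. The group labels, data, and helper names are hypothetical.

```python
from collections import defaultdict


def group_metrics(y_true, y_pred, groups):
    """Per-group positive-prediction rate and accuracy for binary (0/1) labels."""
    stats = defaultdict(lambda: [0, 0, 0])  # group -> [count, predicted positives, correct]
    for t, p, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s[0] += 1
        s[1] += int(p == 1)
        s[2] += int(p == t)
    return {
        g: {"positive_rate": pos / n, "accuracy": correct / n}
        for g, (n, pos, correct) in stats.items()
    }


def fairness_gaps(y_true, y_pred, groups):
    """Return (demographic parity difference, accuracy gap) across groups."""
    metrics = group_metrics(y_true, y_pred, groups)
    rates = [m["positive_rate"] for m in metrics.values()]
    accs = [m["accuracy"] for m in metrics.values()]
    return max(rates) - min(rates), max(accs) - min(accs)


if __name__ == "__main__":
    # Hypothetical predictions for two groups, "a" and "b".
    y_true = [1, 0, 1, 0, 1, 1, 0, 0]
    y_pred = [1, 0, 1, 1, 0, 1, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(fairness_gaps(y_true, y_pred, groups))  # (0.5, 0.0)
```

The example shows why more than one metric is worth checking. Both groups have the same accuracy (0.75), yet group "a" receives positive predictions three times as often as group "b".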

Another key factor is privacy protection, especially in applications involving sensitive information, such as healthcare and financial analytics. To protect data, models were trained using federated learning principles, which allow training on distributed datasets without requiring access to centralized data. This technique reduces the risk of confidential data leaking and supports compliance with privacy regulations such as the GDPR. In addition, homomorphic encryption and differential privacy techniques were used to protect the model outputs so that sensitive information could not be recovered by unauthorized parties.
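The text does not describe its federated learning setup. The sketch below therefore shows federated averaging (FedAvg), a widely used aggregation scheme, on a toy linear model. Each client runs gradient descent on its own data, and the server receives only parameters, which it averages with weights proportional to each client's sample count. The model, learning rate, number of rounds, client data, and helper names are all assumptions. Differential privacy and homomorphic encryption are not shown.

```python
def local_update(weights, data, lr=0.1, epochs=1):
    """Client-side gradient descent on y ~ w0 + w1*x with mean squared error."""
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in data:
            err = (w0 + w1 * x) - y
            g0 += 2 * err / n  # d(MSE)/d(w0)
            g1 += 2 * err * x / n  # d(MSE)/d(w1)
        w0 -= lr * g0
        w1 -= lr * g1
    return [w0, w1]


def fedavg(client_weights, client_sizes):
    """Server-side aggregation: average parameters weighted by local sample counts."""
    total = sum(client_sizes)
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


def federated_training(clients, rounds=200, lr=0.1):
    """Run FedAvg rounds; raw (x, y) pairs never leave their client's list."""
    global_w = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_w, data, lr) for data in clients]
        global_w = fedavg(updates, [len(data) for data in clients])
    return global_w


if __name__ == "__main__":
    # Hypothetical clients holding disjoint samples of y = 1 + 2x.
    clients = [[(0.0, 1.0), (1.0, 3.0)], [(2.0, 5.0), (3.0, 7.0)]]
    print(federated_training(clients))  # converges close to [1.0, 2.0]
```

Only the two model parameters cross the client boundary in each round. This is the property the paragraph relies on when it says that data need not be centralized.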

This study presented a thorough investigation of the frontiers of neural computing, analyzing advances in architectures, key innovations, and their applications in intelligent systems. The experimental results demonstrated that, although traditional deep learning models such as CNNs and LSTMs still perform well, emerging technologies, namely QNNs and SNNs, outperform them in both energy efficiency and accuracy. QNNs achieved the highest accuracy (94.5%), followed by SNNs (92.1%), CNNs (91.4%), and LSTMs (90.3%). However, QNNs also had the longest training time (300 seconds), making them computationally demanding. SNNs, by contrast, performed well in both accuracy and efficiency, needing only 180 seconds. The power consumption analysis further showed that QNNs (30 W) and SNNs (40 W) are considerably more power-efficient than CNNs (70 W) and LSTMs (75 W). This suggests that quantum and neuromorphic computing could support sustainable AI-driven applications.

Although the results are promising, this study has several limitations. Quantum Neural Networks were the most accurate, but their long run times and computational complexity currently make real-time deployment infeasible. Another limitation is that Spiking Neural Networks are still at an early stage of development and, because of their resource demands, require custom hardware to perform well. Furthermore, although the dataset is comprehensive, it may not fully represent real-world conditions, so further testing in varied settings is needed. Future research should focus on optimizing QNNs to reduce training time and on improving compatibility with neuromorphic hardware so that these models can be deployed in AI applications. Addressing these issues will allow neural computing to keep evolving toward AI systems that are scalable, efficient, and ethically deployable.

Copyright © 2025 by the Author(s). Published by Institute of Emerging and Computer Engineers. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.
